Top SEO mistakes are often overlooked but can severely impact your site's performance. This guide reveals the biggest SEO traps in 2025 and how to avoid them effectively.

Top SEO Mistakes That Could Be Hurting Your Website in 2025

Top SEO mistakes are often subtle but powerful enough to hold your website back from ranking where it should. With search engines evolving constantly, small missteps in strategy or site structure can lead to big performance drops.

In this guide, we’ll break down the most common SEO errors businesses make and how they impact search visibility, user experience, and long-term growth. Whether you’re running a blog, eCommerce site, or local service page, fixing these issues is key to unlocking consistent, organic traffic.

Why SEO Still Matters in 2025

SEO still matters in 2025 because organic traffic remains one of the most consistent, cost-effective sources of visitors for websites. Unlike paid ads that stop bringing in traffic the moment you pause them, a well-optimized site continues to attract searchers day after day. This long-term compounding effect is why top-performing sites prioritize SEO as a core part of their digital strategy.

Even as search engines become more sophisticated with AI and machine learning, the fundamentals of SEO still apply. Google still relies heavily on content relevance, website structure, mobile compatibility, and backlink quality to determine rankings. A page that loads fast, answers user intent clearly, and is easy to navigate will still outperform a bloated or poorly optimized competitor.

For example, a local plumber in Virginia with a fast, keyword-optimized mobile site can rank ahead of national chains by focusing on hyperlocal SEO signals and Google Business Profile optimization. Similarly, an eCommerce brand can outrank larger competitors by creating category pages that target long-tail queries with informative, structured content.

Ignoring SEO in 2025 means handing over visibility to competitors who invest in content optimization, technical performance, and user experience. Search behavior hasn’t gone away—it’s just gotten smarter, and successful businesses are adapting their SEO to match.

Confusing SEO with Short-Term Hacks

Many site owners fall into the trap of treating SEO like a set of quick tricks rather than a long-term strategy. This mindset leads to a cycle of chasing the latest loophole or gimmick instead of building sustainable authority and trust with search engines.

- Buying low-quality backlinks in bulk for fast ranking gains

- Stuffing keywords unnaturally into content without context

- Spinning articles with AI tools and publishing them at scale

- Using clickbait titles that don’t match the page’s content

- Cloaking content or using sneaky redirects to game the algorithm

These shortcuts might offer a brief spike in traffic but usually result in penalties or lost rankings once search engines catch on. Real SEO requires consistency, helpful content, and user-first experiences—not shortcuts that undermine your site’s credibility over time.

Ignoring Search Intent in Your Content Strategy

One of the most damaging SEO mistakes is publishing content that doesn’t match what users are actually searching for. Search intent refers to the reason behind a query—whether someone is looking to buy, learn, compare, or navigate to something specific. When your content doesn’t align with that intent, Google is unlikely to rank it well, no matter how optimized it appears on the surface.

- Writing blog posts for transactional keywords like “buy running shoes”

- Creating product pages targeting informational queries like “how to tie running shoes”

- Targeting navigational keywords with generic content that doesn’t guide users

- Misreading commercial intent and failing to offer comparisons or reviews

- Forcing promotional content onto pages meant to answer questions

When you ignore search intent, users quickly bounce from your site because they don’t find what they need, and pages that consistently fail to satisfy searchers tend to slip in the rankings. Understanding the four common types of intent (informational, navigational, transactional, and commercial) can drastically improve how your content performs in search.
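To make the four intent types concrete, here is a minimal Python sketch that guesses a query's intent from common modifier words. The modifier lists and the `classify_intent` function are hypothetical illustrations, not how Google classifies queries; the most reliable check is still to look at what already ranks for the query.

```python
# Hypothetical modifier lists; real intent depends on what actually ranks.
INTENT_MODIFIERS = {
    "transactional": ("buy", "order", "coupon", "discount", "price", "cheap"),
    "commercial": ("best", "top", "review", "reviews", "vs", "compare"),
    "informational": ("how", "what", "why", "when", "guide", "tips"),
}


def classify_intent(query: str, brand_terms: tuple[str, ...] = ()) -> str:
    """Guess a query's dominant intent from the words it contains."""
    words = query.lower().split()
    # A brand or site name in the query usually signals navigational intent.
    if any(term in words for term in brand_terms):
        return "navigational"
    # Check intents in dictionary order; the first matching modifier wins.
    for intent, modifiers in INTENT_MODIFIERS.items():
        if any(word in modifiers for word in words):
            return intent
    return "unknown"


print(classify_intent("buy running shoes"))         # transactional
print(classify_intent("how to tie running shoes"))  # informational
```

Rules like these are crude, so treat the output as a first pass for sorting a keyword list before reviewing the actual results pages by hand.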

Overlooking Technical SEO Fundamentals

Technical SEO is the foundation that allows your content and keywords to be seen, indexed, and ranked by search engines. When the technical aspects of a site are broken or ignored, even the best content can get buried. Search engines rely on clean code, structured data, and crawlable architecture to understand and prioritize your pages.

Common issues include missing or incorrect canonical tags, broken internal links, poor mobile responsiveness, and pages blocked by robots.txt. For example, a fully optimized blog post won’t be crawled if the site’s robots.txt file disallows it, and it may take much longer to be discovered if it’s missing from the sitemap and has few internal links pointing to it. These technical oversights are invisible to most users but highly visible to search engines.
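As a rough illustration of how such issues can be caught automatically, the sketch below uses Python's standard `html.parser` module to check that a page has exactly one canonical tag and that it points where you expect. The `audit_canonical` function and its exact-string comparison are assumptions for this example; real audits usually normalize trailing slashes and protocols first.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> tag on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals: list[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values:
            self.canonicals.append(attributes.get("href") or "")


def audit_canonical(html: str, expected_url: str) -> list[str]:
    """Return canonical-tag problems for a page (an empty list means none found)."""
    finder = CanonicalFinder()
    finder.feed(html)
    if not finder.canonicals:
        return ["missing canonical tag"]
    if len(finder.canonicals) > 1:
        return ["multiple canonical tags"]
    if finder.canonicals[0] != expected_url:
        return [f"canonical points to {finder.canonicals[0]}"]
    return []
```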

Another frequent issue is slow server response time or large page sizes, which hurt both crawl efficiency and user experience. When a server responds slowly, Googlebot tends to reduce how often it crawls, so on larger sites some pages may be crawled less often or not at all. Compressing images, enabling caching, and minimizing unnecessary scripts are simple fixes that can improve both speed and rankings.

Ignoring technical SEO leads to wasted resources and missed opportunities. Even small technical errors can compound over time and lower your entire site’s performance in the search results. Regular audits and monitoring tools like Google Search Console can help uncover these problems early.

Failing to Optimize for Core Web Vitals

Core Web Vitals are essential performance metrics Google uses to evaluate user experience on your website. These include loading speed, interactivity, and visual stability. Ignoring these metrics can lead to lower rankings, especially on mobile, where performance often suffers the most.

- Large images or videos that delay Largest Contentful Paint (LCP)

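- Unoptimized JavaScript delaying responses to clicks and taps, which hurts Interaction to Next Paint (INP), the metric that replaced First Input Delay (FID) in March 2024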

- Layout shifts that affect Cumulative Layout Shift (CLS)

- Too many third-party scripts reducing overall page performance

- Lack of server-side caching causing inconsistent load times

When your site performs poorly on Core Web Vitals, users are more likely to leave before engaging with your content. Google measures these metrics from real-user data collected in Chrome and uses them as part of its page experience signals, so pages that frustrate visitors can lose ground to faster, more stable competitors. Prioritizing these metrics ensures a smoother experience and stronger ranking signals.
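Google publishes fixed boundaries for each metric: LCP is good at 2.5 seconds or less and poor above 4 seconds; INP (the responsiveness metric that replaced FID in March 2024) is good at 200 ms or less and poor above 500 ms; CLS is good at 0.1 or less and poor above 0.25. These are assessed at the 75th percentile of real page loads. The Python sketch below rates a list of field measurements against those boundaries; the simple nearest-rank percentile is an assumption and not how Google's own reports compute the value.

```python
import math

# (good_max, needs_improvement_max) for each Core Web Vital.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "INP": (200, 500),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


def percentile_75(samples: list[float]) -> float:
    """Nearest-rank 75th percentile (a simplification of Google's method)."""
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))
    return ordered[rank - 1]


def rate(metric: str, samples: list[float]) -> str:
    """Rate field measurements as good, needs improvement, or poor."""
    good_max, needs_improvement_max = THRESHOLDS[metric]
    value = percentile_75(samples)
    if value <= good_max:
        return "good"
    if value <= needs_improvement_max:
        return "needs improvement"
    return "poor"
```

Using the 75th percentile rather than the average means a page only rates "good" when most visits, not just typical ones, are fast and stable.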

Keyword Stuffing and Over-Optimization

Keyword stuffing is the outdated practice of loading a webpage with the same target keyword repeatedly in an attempt to manipulate search engine rankings. While this may have worked years ago, modern algorithms can detect unnatural keyword use and penalize sites for it. Pages that are over-optimized often feel robotic and offer little actual value to readers.

For example, writing a sentence like "Our best SEO company offers SEO services for SEO clients who need SEO strategies" is a clear case of keyword stuffing. Instead of improving visibility, this kind of repetition makes content hard to read and signals to Google that the content might be manipulative or spammy.

Over-optimization can also include forcing exact match anchor text in every internal link or optimizing every heading with the same keyword variation. These tactics don’t help rankings and often backfire by reducing trust. Using natural language, semantic variations, and focusing on clarity will always perform better over time.
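One way to see the problem in numbers is keyword density, the share of a text's words taken up by the target phrase. The hypothetical `keyword_density` helper below is a minimal Python sketch; no search engine publishes a "correct" density, so the figure is only useful for spotting obvious repetition like the example sentence above.

```python
import re


def keyword_density(text: str, keyword: str) -> float:
    """Share of the words in `text` that belong to occurrences of `keyword`."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    target = keyword.lower().split()
    n = len(target)
    if not words or n == 0:
        return 0.0
    # Slide a window of n words across the text and count exact matches.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == target)
    return hits * n / len(words)


sentence = ("Our best SEO company offers SEO services "
            "for SEO clients who need SEO strategies")
print(round(keyword_density(sentence, "SEO"), 2))  # 0.29: 4 of 14 words
```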

A balanced SEO strategy prioritizes readability, relevance, and genuine user interest. When you aim to inform instead of manipulate, your content becomes more useful and better aligned with what search engines want to deliver.

Neglecting Mobile-First Indexing Requirements

| Mobile Mistake | Impact on SEO | Example Scenario |
|---|---|---|
| Desktop-only content | Missing from search index | Product descriptions only visible on desktop |
| Poor mobile layout | High bounce rate, lower rankings | Text overlaps, buttons too small to tap |
| Slow mobile load times | Reduced visibility in mobile search | No compression or lazy loading for images |

Mobile-first indexing means Google predominantly uses the mobile version of your site for ranking and indexing. If your mobile version is incomplete, broken, or missing important content, that information likely won’t show up in search results. Sites that ignore mobile usability are at a serious disadvantage as the majority of traffic continues to shift to mobile devices. Prioritizing responsive design, performance, and accessibility is now essential—not optional.

Creating Thin or Duplicate Content

Thin content refers to pages that offer little to no value to the user, often containing fewer than 300 words with no unique insights or information. These pages may exist just to target a keyword, but they fail to satisfy search intent or keep users engaged. Search engines prioritize depth, usefulness, and originality, so thin content rarely performs well in rankings.

Duplicate content is another common issue, where identical or nearly identical text appears across multiple pages of a site or is copied from external sources. For example, eCommerce sites often reuse manufacturer descriptions across hundreds of product pages without adding unique content. This makes it difficult for Google to determine which version to rank and can dilute overall site authority.

Sites that rely heavily on thin or duplicate pages often suffer from poor indexing, low dwell time, and decreased trust from both users and search engines. To avoid these problems, every page should serve a clear purpose, provide value beyond the obvious, and offer something that can't be found elsewhere. Quality beats quantity when it comes to sustainable SEO.
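Near-duplicate pages, such as product pages sharing one manufacturer description, can be spotted by comparing overlapping word sequences ("shingles") with Jaccard similarity. The Python sketch below is a minimal version built on assumptions: the three-word shingle size and the 0.8 threshold are arbitrary starting points, and the pairwise loop only suits small sites.

```python
import re


def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Break text into overlapping word n-grams ("shingles")."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard_similarity(a: str, b: str, size: int = 3) -> float:
    """Overlap of two texts' shingle sets; 1.0 means identical wording."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


def near_duplicates(pages: dict[str, str], threshold: float = 0.8) -> list[tuple[str, str, float]]:
    """Return (url, url, score) for every page pair at or above the threshold."""
    urls = sorted(pages)
    pairs = []
    for i, first in enumerate(urls):
        for second in urls[i + 1:]:
            score = jaccard_similarity(pages[first], pages[second])
            if score >= threshold:
                pairs.append((first, second, round(score, 2)))
    return pairs
```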

Using Outdated SEO Tactics That No Longer Work

Many websites still rely on tactics that were once effective but are now considered outdated or even harmful. Search engines have evolved dramatically, and strategies that once boosted rankings can now trigger penalties or make your content irrelevant. Holding onto these old habits prevents your site from aligning with modern algorithm standards.

- Submitting your site to hundreds of generic web directories

- Creating dozens of doorway pages targeting slight keyword variations

- Using exact match anchor text in every backlink

- Hiding keywords in white text or off-screen divs

- Posting spun or low-quality articles to content farms

Clinging to these outdated methods can hurt your visibility and damage your site’s credibility over time. Google rewards quality, relevance, and user-focused experiences, not gimmicks or manipulative shortcuts. Staying up to date with current SEO practices is essential for long-term growth.

Skipping Title Tag and Meta Description Optimization

| Mistake | Effect on Performance | Example Scenario |
|---|---|---|
| Missing or duplicate title tags | Lower rankings and poor relevance | Blog posts with identical titles across pages |
| Generic or blank meta descriptions | Reduced click-through rates | Pages displaying auto-generated text snippets |
| Overstuffed with keywords | Looks spammy, hurts user trust | Titles like “Best SEO SEO SEO Tips Free Guide” |

Title tags and meta descriptions are often the first impression users get in search results, and skipping their optimization is a missed opportunity. A compelling title that includes relevant keywords and a clear meta description can significantly boost your click-through rate. Search engines use title tags to understand a page’s topic, and while Google has said meta descriptions aren’t a ranking factor, it often shows them as the search snippet, so neglecting either one hurts visibility. Consistent, well-crafted metadata supports both technical SEO and user engagement.
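A simple audit can catch the problems in the table above before they reach search results. The Python sketch below takes a hypothetical mapping of URLs to each page's title and meta description and flags missing, duplicate, or overlong values. The 60- and 160-character limits are common rules of thumb, not official cutoffs; Google actually truncates by pixel width.

```python
from collections import Counter

# Common rules of thumb, not official limits (Google truncates by pixel width).
TITLE_MAX_CHARS = 60
DESCRIPTION_MAX_CHARS = 160


def audit_metadata(pages: dict[str, dict[str, str]]) -> dict[str, list[str]]:
    """Flag missing, duplicate, or overlong titles and meta descriptions."""
    # Count titles case-insensitively so "SEO Tips" and "seo tips" collide.
    title_counts = Counter(
        meta.get("title", "").strip().lower() for meta in pages.values()
    )
    report: dict[str, list[str]] = {}
    for url, meta in pages.items():
        title = meta.get("title", "").strip()
        description = meta.get("description", "").strip()
        issues = []
        if not title:
            issues.append("missing title")
        elif title_counts[title.lower()] > 1:
            issues.append("duplicate title")
        elif len(title) > TITLE_MAX_CHARS:
            issues.append(f"title is {len(title)} characters")
        if not description:
            issues.append("missing meta description")
        elif len(description) > DESCRIPTION_MAX_CHARS:
            issues.append(f"description is {len(description)} characters")
        if issues:
            report[url] = issues
    return report
```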

Poor URL Structure and Slug Formatting

A clean, readable URL is not just good for users—it also helps search engines better understand and rank your content. Poorly formatted URLs that contain long strings of random numbers, special characters, or irrelevant words can confuse both crawlers and people. An example of a bad URL is www.example.com/page.php?id=4532&ref=abc, which gives no clear indication of the content’s topic.

Instead, a clear slug like www.example.com/seo-mistakes-to-avoid is short, keyword-rich, and descriptive. Good slugs reinforce the page’s intent and help with indexing, especially when paired with a logical URL structure that reflects the site hierarchy. Consistency is key—random capitalization, excessive word length, or filler terms like "the" or "and" can make slugs harder to read and less impactful.
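The slug rules above can be sketched as a small Python function: fold accented characters to ASCII, lowercase everything, drop filler words, and join the remaining words with hyphens. The `slugify` name, the stop-word list, and the six-word cap are illustrative assumptions.

```python
import re
import unicodedata

# Filler words to drop; this short list is an illustrative assumption.
STOP_WORDS = {"a", "an", "and", "the", "of"}


def slugify(title: str, max_words: int = 6) -> str:
    """Turn a page title into a short, lowercase, hyphenated slug."""
    # Fold accented characters to plain ASCII (e.g. "é" becomes "e").
    ascii_text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode()
    words = re.findall(r"[a-z0-9]+", ascii_text.lower())
    # Fall back to the original words if every word was a stop word.
    kept = [word for word in words if word not in STOP_WORDS] or words
    return "-".join(kept[:max_words])


print(slugify("The SEO Mistakes to Avoid"))  # seo-mistakes-to-avoid
```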

Another mistake is changing URLs without proper redirects, which results in broken links and lost rankings. Every URL change should be carefully managed with 301 (permanent) redirects to preserve link equity and ensure a smooth experience. Whether you're launching a new site or updating old content, paying attention to your URL structure can make a measurable difference in your SEO performance.
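When URLs change more than once, redirects can pile up into chains (A to B to C) or even loops. The minimal Python sketch below assumes your redirects are kept as a simple old-to-new mapping; it collapses each chain so every old URL can get a single 301 straight to its final destination, and it reports loops instead of following them forever. The `flatten_redirects` helper is hypothetical, and its output would still need to be loaded into your server or CMS redirect rules.

```python
def flatten_redirects(redirects: dict[str, str]) -> dict[str, str]:
    """Point every old URL straight at its final destination.

    Raises ValueError if the map contains a redirect loop.
    """
    flattened = {}
    for source in redirects:
        seen = {source}
        target = redirects[source]
        # Keep following hops until we reach a URL that is not redirected.
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        flattened[source] = target
    return flattened
```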

Not Using Internal Linking Effectively

| Internal Linking Issue | Impact on SEO | Example Scenario |
|---|---|---|
| No links to key pages | Reduced visibility and low crawl rate | Homepage doesn’t link to service or product pages |
| Generic or irrelevant anchor text | Weak context signals for search bots | Linking with “click here” instead of topic keywords |
| Excessive links on one page | Diluted authority and confusion | 100+ random links on a single blog post |

Effective internal linking helps search engines discover and understand the structure of your site while distributing authority to important pages. When done right, it improves crawlability, keeps users engaged, and supports stronger rankings across your domain. Ignoring or misusing internal links can isolate valuable content and make your site harder to navigate, both for bots and visitors. A strategic internal linking approach improves both visibility and user experience.
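Internal links form a graph, so a breadth-first search from the homepage shows how many clicks each page sits from the front door and which pages no internal link reaches at all (orphan pages). This Python sketch assumes a hypothetical `links` mapping of each page to the pages it links to, such as one exported from a site crawler.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Breadth-first search: number of clicks from the homepage to each page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(links: dict[str, list[str]], all_pages: set[str], home: str = "/") -> set[str]:
    """Pages that exist but are unreachable through internal links."""
    return all_pages - set(click_depths(links, home))
```

Pages that show up with a large depth, or in the orphan set, are good candidates for new contextual links from related, well-linked pages.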

Over-Relying on Backlinks Without Content Quality

Backlinks remain a critical ranking factor, but they are not a magic solution without strong content to support them. Many site owners invest heavily in link-building while neglecting to create valuable, relevant, and original material. This creates an imbalance where external signals suggest authority, but the page itself fails to deliver a satisfying user experience.

For example, a blog post with ten high-authority backlinks might still underperform if the content is shallow, outdated, or poorly formatted. Google’s algorithms look at both the quality of backlinks and the substance of the content they point to. If the content lacks depth or relevance, those links lose value in the ranking equation.

Effective SEO requires a balance between off-page and on-page efforts. Quality backlinks can enhance visibility, but only when they lead to trustworthy, well-structured pages that match search intent. Relying too heavily on backlinks without investing in content creates a weak foundation that won’t hold up long term.

Ignoring Image Optimization and Alt Attributes

Images are often overlooked in SEO, but they play a critical role in both page performance and accessibility. When images are uncompressed, oversized, or incorrectly formatted, they slow down load times and negatively impact Core Web Vitals. A page with five 5MB images can significantly delay loading, especially on mobile connections, leading to higher bounce rates and lower rankings.

Alt attributes are equally important for search engine understanding and user accessibility. Without descriptive alt text, screen readers have nothing meaningful to announce, and Google has less context for understanding and ranking the image in Google Images. For example, instead of using an empty or vague alt attribute like alt="image1", a better version would be alt="Red Nike Air Max sneakers on white background".

Optimizing images includes compressing file sizes, using modern formats like WebP, serving appropriately sized versions for different screens, and writing meaningful alt text that aligns with the content. This helps improve performance, keyword relevance, and user experience. Ignoring these basic steps not only hurts SEO but also excludes users who rely on screen readers to navigate the web.
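A quick way to find images that need attention is to scan a page's HTML for `<img>` tags with missing, empty, or placeholder-style alt text. The sketch below uses Python's standard `html.parser`; the pattern for "vague" values is a heuristic assumption, and an empty alt is flagged only for review because it is the correct choice for purely decorative images.

```python
import re
from html.parser import HTMLParser

# Heuristic for placeholder alt text such as "image1", "photo", or "IMG_2041".
VAGUE_ALT = re.compile(r"^(image|img|photo|picture|pic)[\s_-]*\d*$", re.IGNORECASE)


class AltTextAudit(HTMLParser):
    """Record <img> tags whose alt text is missing, empty, or vague."""

    def __init__(self) -> None:
        super().__init__()
        self.issues: list[tuple[str, str]] = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src") or "(no src)"
        alt = attributes.get("alt")
        if alt is None:
            self.issues.append((src, "missing alt"))
        elif not alt.strip():
            # Correct for decorative images, wrong for meaningful ones.
            self.issues.append((src, "empty alt (review)"))
        elif VAGUE_ALT.match(alt.strip()):
            self.issues.append((src, f"vague alt: {alt!r}"))


def audit_images(html: str) -> list[tuple[str, str]]:
    """Return (src, problem) pairs for every questionable <img> tag."""
    audit = AltTextAudit()
    audit.feed(html)
    return audit.issues
```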

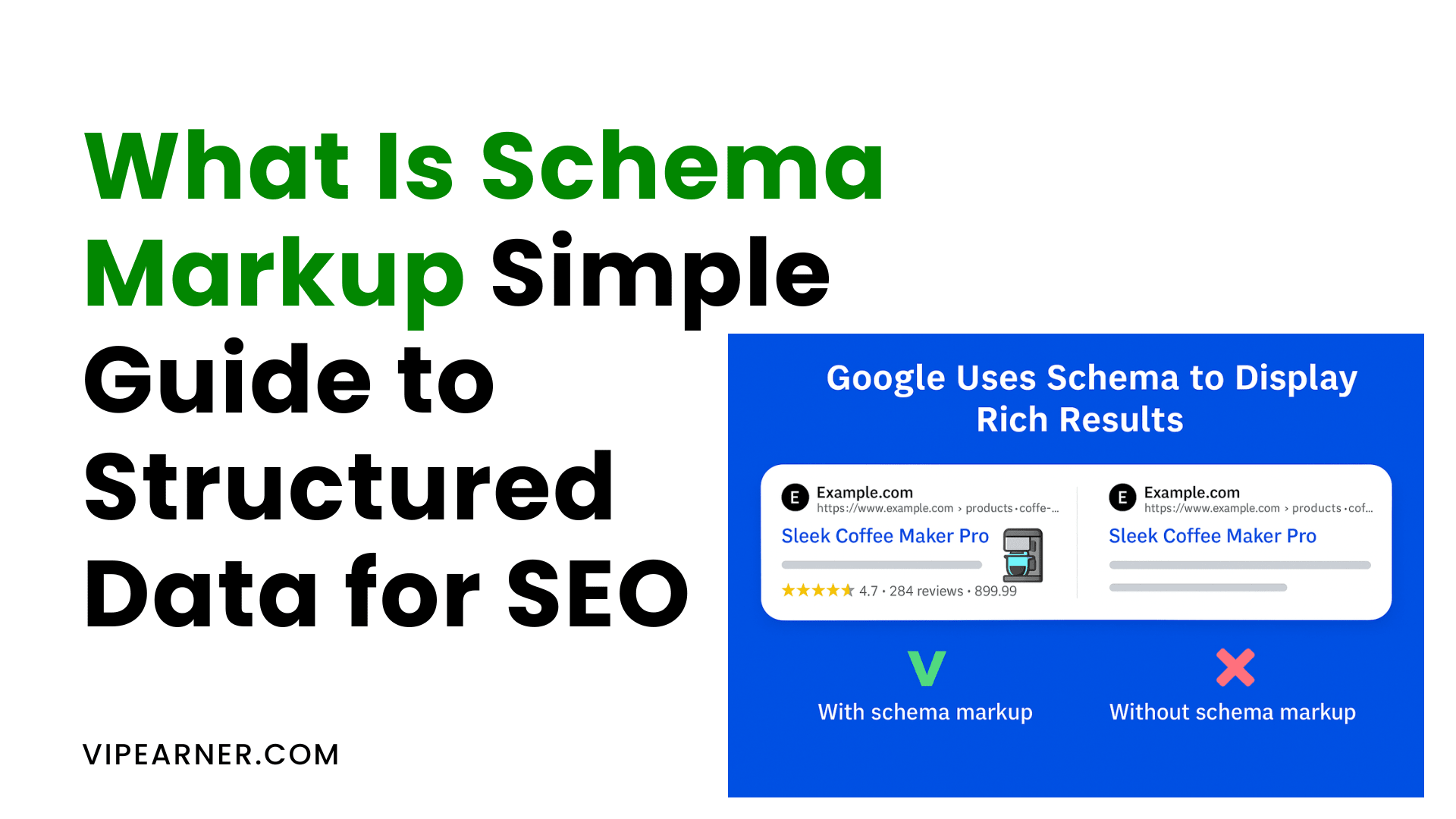

Neglecting Schema Markup and Structured Data

Schema markup is a form of structured data that helps search engines better understand the context of your content. When implemented correctly, it can enhance how your pages appear in search results through rich snippets, such as star ratings, product information, event dates, and more. Without it, your listings may look plain and fail to attract clicks even if you rank well.

For example, a recipe page without schema might just show the title and URL, while a competitor using structured data displays cooking time, ratings, and a thumbnail image directly in the search result. This additional information increases visibility and drives higher engagement. Similarly, local businesses can benefit from LocalBusiness schema to highlight address, hours, and reviews.

Neglecting structured data means missing out on these enhanced listings and signals that help Google understand your site better. It doesn’t directly affect rankings, but it improves click-through rates and supports broader SEO efforts. Tools like Google’s Rich Results Test can help identify gaps and opportunities for adding schema to your content.
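For a local business, structured data is usually added to the page as a JSON-LD script. The Python sketch below builds one using schema.org's `LocalBusiness` type with `PostalAddress`, `telephone`, and `openingHours` properties; the `local_business_jsonld` function is hypothetical, any business details you pass in are your own, and the output should still be checked with Google's Rich Results Test.

```python
import json


def local_business_jsonld(name, street, city, region, postal_code, phone, hours):
    """Build a schema.org LocalBusiness JSON-LD <script> tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "LocalBusiness",
        "name": name,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,
            "addressRegion": region,
            "postalCode": postal_code,
        },
        "telephone": phone,
        # schema.org opening-hours strings, e.g. "Mo-Fr 09:00-18:00".
        "openingHours": hours,
    }
    return ('<script type="application/ld+json">\n'
            + json.dumps(data, indent=2)
            + "\n</script>")
```

The returned tag can be pasted into the page's HTML as-is; keeping the name, address, and phone identical to the visible page text avoids sending mixed signals.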

Letting Crawl Errors and Broken Links Pile Up

Crawl errors and broken links are often ignored until they start affecting site performance and visibility in search results. When search engine bots encounter errors like 404 pages, server issues, or redirect loops, they may stop indexing parts of your site. Over time, these problems reduce crawl efficiency and can lead to lost ranking opportunities.

For example, an old blog post that links to a now-deleted product page can return a 404 error, creating a poor user experience and a dead end for search engines. Multiply this by dozens or hundreds of links, and your site begins to look unmaintained or untrustworthy. Broken internal links also disrupt the flow of link equity, weakening the overall authority passed through your pages.

Regular audits using tools like Google Search Console or Screaming Frog help identify and fix crawl issues before they grow into larger problems. By repairing broken links, setting up proper redirects, and keeping your sitemap updated, you maintain a healthy technical foundation. A clean crawl profile signals to search engines that your site is reliable and worth indexing thoroughly.
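Link checking can also be scripted. This Python sketch sends a HEAD request to each outgoing link with `urllib.request` and reports any that return a 4xx or 5xx status. The `pages` mapping of page URL to links is a hypothetical input (for example, from a crawler export); some servers reject HEAD requests, and network failures raise `urllib.error.URLError`, so a production checker would need retries and a GET fallback.

```python
import urllib.error
import urllib.request
from collections.abc import Callable


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status for `url` (redirects are followed automatically)."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # 4xx/5xx responses arrive as exceptions


def find_broken_links(
    pages: dict[str, list[str]],
    get_status: Callable[[str], int] = fetch_status,
) -> list[tuple[str, str, int]]:
    """List (page, link, status) for every link that returns 4xx or 5xx."""
    cache: dict[str, int] = {}  # check each unique link only once
    broken = []
    for page, links in pages.items():
        for link in links:
            if link not in cache:
                cache[link] = get_status(link)
            if cache[link] >= 400:
                broken.append((page, link, cache[link]))
    return broken
```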

Publishing Without an SEO Content Strategy

Publishing content without a clear SEO strategy is one of the fastest ways to waste time and resources. Random blog posts or landing pages that aren’t aligned with search demand, user intent, or keyword research often fail to attract organic traffic. Without structure, even high-quality writing can go unnoticed by search engines and potential readers.

For example, a fitness website might publish great content on nutrition and exercise but miss key ranking opportunities by not targeting long-tail keywords like “15-minute beginner workouts at home.” Instead of attracting new visitors through specific queries, the content blends into a sea of generic material. A proper strategy would group related topics, map keywords to search intent, and guide users through a content funnel.

An SEO content strategy involves planning topics based on keyword gaps, competitor analysis, and user behavior. It ensures that every piece of content contributes to your site’s visibility, authority, and business goals. Publishing without this foundation makes growth unpredictable and leaves too much to chance.

Not Updating or Republishing Old Content

Old content that once ranked well can slowly lose visibility as newer, more relevant pages enter the search results. Algorithms favor fresh, accurate, and up-to-date information, especially for time-sensitive topics like tech trends, health advice, or marketing strategies. Leaving content untouched for years can signal to Google that it’s outdated or no longer useful.

For example, a blog post from 2018 titled “Top Instagram Tips for Businesses” might reference features that no longer exist or skip newer tools like Reels. Even if it once ranked highly, users searching in 2025 expect current insights. Republishing that same post with updated tips, statistics, and examples can revive its visibility and relevance.

Updating content involves more than just changing a date. It means reviewing the structure, refreshing internal links, optimizing for current keywords, and improving clarity or readability. When done consistently, this approach helps preserve existing rankings, boost underperforming pages, and extend the lifespan of your content investment.

Ignoring Local SEO for Brick-and-Mortar Businesses

Local SEO is critical for businesses that rely on in-person visits, such as restaurants, dental clinics, gyms, and retail stores. When these businesses fail to optimize for local search, they miss out on nearby customers actively searching for their services. Local intent is strong, and users looking for “plumber near me” or “best pizza in Richmond” expect fast, relevant results.

A common mistake is not claiming or optimizing a Google Business Profile. Without a claimed, complete profile, a business is far less likely to appear in the map pack or local search listings, which can lead to massive visibility loss. Other oversights include inconsistent name, address, and phone number (NAP) data across directories, no location-specific pages, and missing reviews or local keywords.

For example, a pet grooming shop that doesn’t include its city name on service pages may rank poorly for searches like “pet groomer in Arlington.” Competitors who do use local schema, targeted content, and citation building will outrank them. Local SEO connects physical businesses with real-time online search demand in their service area.
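Inconsistent NAP data is easy to miss by eye because small formatting differences ("Street" vs "St", different phone punctuation) hide real mismatches. The Python sketch below normalizes each listing before comparing it with a reference listing; the abbreviation table, the 10-digit North American phone assumption, and the function names are all hypothetical.

```python
import re

# Illustrative abbreviation table; a real one would be much longer.
ABBREVIATIONS = {"street": "st", "avenue": "ave", "road": "rd", "suite": "ste"}


def normalize_nap(name: str, address: str, phone: str) -> tuple[str, str, str]:
    """Reduce a name/address/phone record to a comparable form."""

    def clean(text: str) -> str:
        words = re.findall(r"[a-z0-9]+", text.lower())
        return " ".join(ABBREVIATIONS.get(word, word) for word in words)

    # Keep the last 10 digits so "+1 (703) 555-0100" matches "703.555.0100"
    # (assumes 10-digit North American numbers).
    digits = re.sub(r"\D", "", phone)[-10:]
    return clean(name), clean(address), digits


def inconsistent_listings(listings: dict[str, tuple[str, str, str]], reference: str) -> list[str]:
    """Return the sources whose normalized NAP differs from the reference source."""
    expected = normalize_nap(*listings[reference])
    return [source for source, record in listings.items()
            if normalize_nap(*record) != expected]
```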

Ignoring this channel means leaving traffic and revenue on the table, especially as mobile searches grow and users rely more on localized results. Brick-and-mortar businesses that invest in local SEO can consistently appear in front of nearby customers ready to take action.

Failing to Optimize for Voice Search and Conversational Queries

Voice search has changed the way people interact with search engines, favoring natural, conversational phrases over short keywords. Instead of typing “best running shoes 2025,” users might ask, “What are the best running shoes for flat feet this year?” Content that doesn’t account for these longer, question-based queries may fail to appear in voice-driven results.

A key mistake is creating pages that only target short, transactional phrases and ignore how people speak. Voice queries often begin with who, what, when, where, why, or how, and they expect direct, concise answers. For example, a travel site that only targets “cheap flights Miami” might miss traffic from queries like “How can I find the cheapest flights to Miami in summer?”

Optimizing for voice means using a more conversational tone, integrating FAQ sections, and structuring answers clearly within the content. It also involves targeting long-tail keywords and featured snippet opportunities. By adapting to how people naturally ask questions, websites can capture a growing segment of mobile and smart speaker users.
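Since voice queries tend to start with question words, one practical step is to pull question-style queries out of a keyword list or Search Console export and group them as candidate FAQ entries. This Python sketch assumes a plain list of query strings and uses only the six question words mentioned above; `group_questions` is a hypothetical helper.

```python
from collections import defaultdict

# Question words that voice queries commonly start with.
QUESTION_WORDS = ("who", "what", "when", "where", "why", "how")


def group_questions(queries: list[str]) -> dict[str, list[str]]:
    """Group question-style queries by their opening question word."""
    groups: dict[str, list[str]] = defaultdict(list)
    for query in queries:
        words = query.lower().split()
        if words and words[0] in QUESTION_WORDS:
            groups[words[0]].append(query)
    return dict(groups)
```

Each group can then be answered in a short, direct paragraph under a question-style subheading, which suits both voice answers and featured snippets.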

Overlooking Site Speed and Performance Issues

Site speed is a direct ranking factor and a major component of user experience. Slow-loading pages increase bounce rates, reduce time on site, and make visitors less likely to return. Google prioritizes pages that load quickly, especially on mobile devices where slow speeds are more noticeable.

Common culprits include uncompressed images, excessive JavaScript, lack of browser caching, and poorly optimized hosting environments. For example, a homepage filled with full-resolution images and autoplay videos may look appealing but could take over 10 seconds to load. Users typically won’t wait that long, and Google will respond by pushing faster competitors ahead in the rankings.

Performance tools like Google PageSpeed Insights and GTmetrix can help diagnose speed issues and suggest improvements. Fixes such as enabling lazy loading, reducing server response time, and using modern image formats like WebP can have a major impact. Improving load time isn’t just technical maintenance—it’s a strategic move that supports both SEO and overall user satisfaction.

Forgetting to Submit XML Sitemaps and Robots.txt Updates

Submitting an XML sitemap helps search engines discover and index the pages on your website more efficiently. Without one, important content may be missed or take longer to appear in search results, especially on large or newly launched sites. An up-to-date sitemap tells search engines what to crawl and when it was last modified, improving overall visibility.
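Generating the sitemap from your list of live pages helps keep it accurate. This Python sketch uses the standard `xml.etree.ElementTree` module to write the `urlset`, `url`, `loc`, and `lastmod` elements defined by the sitemaps.org protocol; the `build_sitemap` function and its page list are hypothetical. The finished file can be submitted in Search Console or referenced with a `Sitemap:` line in robots.txt.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Build sitemap XML from (absolute URL, last-modified date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # special characters are escaped
        # W3C Datetime format, e.g. "2025-01-15".
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body
```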

The robots.txt file, on the other hand, controls which areas of your site bots can or cannot access. Misconfigured rules or forgotten updates can unintentionally block critical pages from being crawled. For example, a Disallow rule applied to /blog/ would stop search engines from crawling every blog post, so their content can’t be properly indexed, even if it’s high quality and fully optimized.
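Before deploying a robots.txt change, you can test it against the URLs you care about. Python's standard `urllib.robotparser` module can do this offline, as in the sketch below; note that it implements classic prefix matching and may not interpret wildcard patterns exactly as Googlebot does, so confirm edge cases with Google's own tools. The `blocked_urls` helper and the sample rules are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the robots.txt rules stop `user_agent` from crawling."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse text directly; no network fetch
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


rules = "User-agent: *\nDisallow: /blog/\n"
print(blocked_urls(rules, ["https://example.com/blog/seo-mistakes",
                           "https://example.com/about"]))
# ['https://example.com/blog/seo-mistakes']
```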

Neglecting these foundational tools can lead to incomplete indexing, wasted crawl budget, and reduced organic reach. Keeping your sitemap accurate and ensuring your robots.txt reflects your current SEO goals allows search engines to better understand and prioritize your content. Regular checks and updates ensure these files support your SEO strategy instead of working against it.

Targeting Keywords Without Volume or Intent

Targeting keywords that have no search volume or the wrong user intent is a common SEO misstep that wastes time and effort. Just because a keyword sounds relevant doesn’t mean people are actively searching for it. Creating content around these low-value terms results in pages that rarely attract traffic or rank for anything meaningful.

For example, targeting a phrase like “marketing improvement insights 2025 guide” might seem specific, but if it gets zero monthly searches and has unclear intent, it won’t produce results. In contrast, a slightly broader but high-intent phrase like “best marketing trends for 2025” will have actual demand and attract users who are actively looking for insights. Understanding search behavior is key to choosing the right targets.

Intent is just as important as volume. Writing a blog post for a transactional keyword like “buy email marketing software” and filling it with general tips will misalign with what users expect. Google will favor pages that directly match the commercial nature of the query. Good keyword strategy requires aligning content with what users want and ensuring those queries have the potential to deliver consistent traffic.

Ignoring Analytics and Search Console Data

Failing to monitor Google Analytics and Search Console means missing out on crucial insights that drive SEO success. These tools provide ongoing feedback on how users interact with your site and how search engines view your content. Without this data, it’s nearly impossible to identify what’s working, what’s underperforming, and where opportunities exist.

For example, Search Console might reveal that a blog post ranks on page two for a high-volume keyword, suggesting it could benefit from improved internal linking or content updates. Analytics could show a high bounce rate on a landing page, indicating that the content doesn’t match user intent or loads too slowly. These metrics help diagnose and fix problems before they grow.
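Finding these page-two opportunities can be automated from a performance export. This Python sketch reads CSV text with `query`, `impressions`, and `position` columns (assumed names; rename them to match your export) and keeps queries with an average position greater than 10 and up to 20 that have enough impressions to be worth the effort. The 100-impression cutoff and the `striking_distance` name are arbitrary assumptions.

```python
import csv
import io


def striking_distance(csv_text: str, min_impressions: int = 100) -> list[dict]:
    """Page-two queries with enough impressions, sorted by impressions (highest first)."""
    picks = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        position = float(row["position"])
        impressions = int(row["impressions"])
        if 10 < position <= 20 and impressions >= min_impressions:
            picks.append({"query": row["query"], "position": position,
                          "impressions": impressions})
    return sorted(picks, key=lambda pick: pick["impressions"], reverse=True)
```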

Relying on guesswork rather than actual performance data leads to inefficient content strategies and missed ranking potential. By reviewing impressions, click-through rates, and user behavior regularly, you can adjust your SEO efforts based on facts rather than assumptions. Consistent tracking ensures every decision is guided by insight, not intuition.

Letting SEO Become an Afterthought Instead of a Foundation

Many websites treat SEO as something to apply after a page is built, rather than integrating it from the start. This reactive approach often leads to missed keyword opportunities, technical flaws, and content that doesn’t align with user search behavior. When SEO is added as a final step, it becomes more about surface-level tweaks than building real visibility.

For example, a company might launch a new service page with strong visuals and branding, only to realize later that the headline, URL, and copy contain none of the terms users actually search for. Retroactively inserting keywords often disrupts flow and rarely performs as well as content created with SEO in mind. By then, fixing technical issues like crawl depth or URL hierarchy may require a costly redesign.

Strong SEO begins at the planning phase—with keyword research, intent mapping, site architecture, and content strategy all working together. When built into the foundation, SEO guides everything from copywriting to internal linking to page speed. This approach sets the stage for long-term rankings, sustainable traffic, and fewer issues down the line.

Overusing AI Tools Without Human Editing

AI content tools can speed up content creation, but relying on them without proper editing often leads to generic, repetitive, or factually incorrect material. Search engines reward originality, clarity, and depth—qualities that raw AI output doesn’t always deliver. Publishing unedited AI content increases the risk of keyword stuffing, inconsistent tone, and inaccurate information.

For example, an AI-generated product review page might repeat the same vague statements across multiple items, such as “This is a great product for everyone.” Without human input, it lacks unique insights or real value for readers. Search engines recognize low-quality patterns and may downrank pages that feel auto-generated or thin.

AI can be a powerful tool for drafting outlines, generating ideas, or speeding up first drafts, but final content still needs a human touch. Editors add voice, context, structure, and accuracy—elements that elevate the content beyond automation. When used thoughtfully, AI supports SEO; when overused without review, it becomes a liability.

Not Monitoring Competitor SEO Strategies

Failing to keep an eye on your competitors' SEO efforts leaves you blind to industry shifts, missed opportunities, and changing search behavior. Competitor analysis reveals what content is ranking, which keywords are driving traffic, and how others are adapting to algorithm updates. Without this insight, your strategy risks falling behind more proactive players.

- Ignoring which keywords competitors are ranking for and targeting

- Overlooking how often they update or expand top-performing pages

- Missing new content formats like comparison tables, FAQs, or videos

- Failing to track backlink growth and link-building campaigns

- Not analyzing changes in their site structure, speed, or UX

Keeping tabs on competitors helps you identify gaps in your own strategy and adapt faster to trends. Tools like Ahrefs, Semrush, or even manual searches can uncover valuable patterns. Monitoring doesn’t mean copying—it means learning from what’s working and using that information to sharpen your own edge.

Ignoring User Experience and Engagement Signals

| UX Issue | Negative Impact on SEO | Example Scenario |
|---|---|---|
| Cluttered or confusing layout | Higher bounce rate | Visitors leave homepage within seconds due to overload |
| Hard-to-read text or poor design | Lower dwell time | Light gray text on white background causes eye strain |
| Aggressive pop-ups or autoplay | Reduced engagement and satisfaction | Users exit when a video starts playing without prompt |

Behavioral metrics like bounce rate, time on site, and click-through rate show how users interact with your pages. Search engines are widely understood to factor in engagement signals, such as how searchers respond to a result after clicking it. Poor design choices or frustrating experiences suggest that content may not be valuable or trustworthy, and when users don’t engage, rankings tend to slip. Prioritizing clean design, readability, and intuitive navigation improves both SEO and visitor satisfaction.
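You can check the "light gray text on white" scenario objectively. WCAG 2.x defines a contrast ratio between text and background colors and sets 4.5:1 as the Level AA minimum for normal-size text. The Python sketch below implements that published formula. It measures readability only and says nothing directly about rankings.

```python
def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel value to linear light (WCAG 2.x formula)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a '#RRGGBB' color, from 0 (black) to 1 (white)."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(fg: str, bg: str) -> float:
    """WCAG contrast ratio, from 1.0 (identical colors) to 21.0 (black on white)."""
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa_normal_text(fg: str, bg: str) -> bool:
    """WCAG 2.x Level AA requires at least 4.5:1 for normal-size body text."""
    return contrast_ratio(fg, bg) >= 4.5


for gray in ("#CCCCCC", "#767676"):
    ratio = contrast_ratio(gray, "#FFFFFF")
    print(f"{gray} on white: {ratio:.2f}:1, passes AA: {passes_aa_normal_text(gray, '#FFFFFF')}")
```

For example, `#CCCCCC` on white comes out at roughly 1.6:1, well short of 4.5:1. By contrast, `#767676` is about the lightest gray that still passes on a white background.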

Failing to Build E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness)

| Missing E-E-A-T Element | Impact on SEO | Example Scenario |
|---|---|---|
| No author bios or credentials | Low credibility in YMYL content | Health article published without showing medical expertise |
| Lack of real-world experience | Weak relevance and engagement signals | Financial advice written by someone with no industry background |
| No external trust signals | Reduced authority and ranking potential | No backlinks, reviews, or mentions from reputable sources |

E-E-A-T is the framework Google’s Search Quality Rater Guidelines use to judge whether content is reliable, accurate, and safe for users. It matters most in sensitive “Your Money or Your Life” (YMYL) niches like health, finance, and law. It is not a single ranking factor, but Google’s systems aim to reward content that demonstrates these qualities, so without clear signals of expertise or trust, even well-written content may struggle to rank. Building E-E-A-T involves showcasing experience, citing reliable sources, using real author profiles, and earning recognition from other trusted sites. Demonstrating these factors supports both SEO and user confidence.
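Structured data cannot create expertise on its own, but it can state clearly who wrote a page. This sketch assumes a Python build step that renders your templates. It emits schema.org `Article` markup whose `author` is a `Person` with a bio page and external profiles. The `article_jsonld` helper and every value in the usage example are hypothetical placeholders.

```python
import json


def article_jsonld(headline: str, date_published: str, author_name: str,
                   author_title: str, author_url: str, same_as: list[str]) -> str:
    """Build a JSON-LD <script> tag describing an Article and its real author."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "datePublished": date_published,  # ISO 8601 date, e.g. "2025-03-01"
        "author": {
            "@type": "Person",
            "name": author_name,
            "jobTitle": author_title,
            "url": author_url,    # the author's bio page on your own site
            "sameAs": same_as,    # external profiles that confirm the author's identity
        },
    }
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"


# Placeholder values for illustration only
print(article_jsonld(
    headline="How Much Fiber Do Adults Need?",
    date_published="2025-03-01",
    author_name="Jane Doe",
    author_title="Registered Dietitian",
    author_url="https://example.com/authors/jane-doe",
    same_as=["https://www.linkedin.com/in/jane-doe-example"],
))
```

The markup should match the visible byline and author bio on the page. Describing credentials that the page does not actually show would undermine trust rather than build it.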

Assuming One SEO Strategy Fits Every Website

Treating SEO as a one-size-fits-all process ignores the unique goals, audiences, and challenges each site faces. What works for an eCommerce store won’t necessarily apply to a local service provider, a SaaS company, or a news blog. SEO strategy should be tailored to the business model, search intent, and competition level of the niche.

- Applying the same keyword tactics to both B2B and B2C websites

- Using product-style schema markup on service-based sites

- Prioritizing backlinks for a local business instead of local citations

- Ignoring content freshness for a news site that needs constant updates

- Treating informational blogs the same as conversion-focused landing pages

Each website requires its own SEO roadmap built around its structure, goals, and user behavior. Copying someone else's formula can lead to missed opportunities or wasted effort. A customized strategy will usually outperform a generic checklist.

Depending on Plugins Without Manual Checks

| Plugin Dependency Issue | SEO Risk | Example Scenario |
|---|---|---|
| Relying on default settings | Missed optimization opportunities | SEO plugin generates generic meta descriptions |
| Assuming automatic schema is correct | Misleading or incomplete structured data | Plugin marks all posts as “Article” without specifics |
| Skipping content reviews | Low-quality or poorly optimized pages | Trusting readability scores without manual proofreading |

SEO plugins are helpful tools, but they can't replace critical thinking or human oversight. Blindly trusting automation leads to errors that go unnoticed—errors that can hurt rankings or misrepresent content. Manual checks ensure your titles, schema, and keyword use are aligned with intent and best practices. Balancing automation with hands-on review keeps your SEO both efficient and accurate.
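You can partly script the manual check. The Python sketch below pulls every JSON-LD block out of a page's HTML using the standard library's `html.parser`. It then flags three things: invalid JSON, items typed only as the generic `Article`, and article-like items missing a few fields. The `EXPECTED_FIELDS` tuple is an assumption to adapt to your own content types, not an official requirement.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collect the raw text of every <script type="application/ld+json"> block."""

    def __init__(self) -> None:
        super().__init__()
        self._in_jsonld = False
        self.blocks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_jsonld = False

    def handle_data(self, data):
        if self._in_jsonld:
            self.blocks[-1] += data


ARTICLE_TYPES = ("Article", "BlogPosting", "NewsArticle")
EXPECTED_FIELDS = ("headline", "author", "datePublished")  # assumption: your own review policy


def review_schema(html: str) -> list[str]:
    """Return human-readable warnings about a page's JSON-LD structured data."""
    parser = JsonLdExtractor()
    parser.feed(html)
    warnings: list[str] = []
    for raw in parser.blocks:
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as exc:
            warnings.append(f"Invalid JSON-LD: {exc}")
            continue
        # Some plugins bundle several items in an "@graph" array
        if isinstance(data, list):
            items = data
        else:
            items = data.get("@graph", [data])
        for item in items:
            if not isinstance(item, dict):
                continue
            kind = item.get("@type", "(missing @type)")
            if kind == "Article":
                warnings.append("Generic 'Article' type; a more specific type such as BlogPosting may fit better")
            if kind in ARTICLE_TYPES:
                for field in EXPECTED_FIELDS:
                    if field not in item:
                        warnings.append(f"{kind} is missing '{field}'")
    return warnings
```

A warning is a prompt for a human to look at the page, not proof of an error. Google's Rich Results Test and the Schema Markup Validator remain the authoritative checks.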

Not Running Regular Site Audits and Health Checks

Skipping regular SEO audits allows technical issues, content decay, and performance problems to accumulate over time. What starts as a few broken links or slow-loading pages can quickly turn into widespread visibility loss. Audits are essential for uncovering hidden errors that aren’t obvious through day-to-day site use.

For example, a site might unknowingly have dozens of orphaned pages: content that exists but isn’t linked to from anywhere else on the site. Crawlers struggle to discover these pages, and the pages receive no internal link authority, so valuable assets can stay invisible in search results. Other issues like duplicate title tags, outdated redirects, or missing alt attributes often go unnoticed without regular reviews.
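A small script can approximate three of these checks: orphaned pages, duplicate titles, and missing alt attributes. This Python sketch makes three assumptions:

- You already have a snapshot of your pages as a mapping from URL to HTML, for example from your CMS or sitemap. A page list built only by following links can never contain orphans.
- Internal links are root-relative paths.
- The `audit` function, the `entry_points` parameter, and the sample pages are all hypothetical.

```python
from collections import defaultdict
from html.parser import HTMLParser


class PageScanner(HTMLParser):
    """Pull the <title>, internal links, and alt-less images from one page."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.links: set[str] = set()
        self.images_missing_alt = 0
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "a":
            href = attrs.get("href") or ""
            if href.startswith("/"):  # assumption: internal links are root-relative
                self.links.add(href.split("#")[0])
        elif tag == "img" and "alt" not in attrs:  # alt="" is valid for decorative images
            self.images_missing_alt += 1

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit(pages: dict[str, str], entry_points: set[str]) -> dict[str, object]:
    """Run three simple checks over a snapshot of pages (URL -> HTML)."""
    inbound: dict[str, set[str]] = defaultdict(set)
    titles: dict[str, list[str]] = defaultdict(list)
    missing_alt: dict[str, int] = {}
    for url, html in pages.items():
        scanner = PageScanner()
        scanner.feed(html)
        for target in scanner.links:
            if target != url:  # ignore self-links
                inbound[target].add(url)
        titles[scanner.title.strip()].append(url)
        if scanner.images_missing_alt:
            missing_alt[url] = scanner.images_missing_alt
    return {
        # Known pages that no other page links to (entry points such as "/" excluded)
        "orphans": sorted(u for u in pages if u not in entry_points and not inbound[u]),
        "duplicate_titles": {t: sorted(us) for t, us in titles.items() if len(us) > 1},
        "missing_alt": missing_alt,
    }


# Hypothetical three-page site
pages = {
    "/": '<title>Home</title><a href="/services">Services</a><img src="logo.png" alt="Logo">',
    "/services": '<title>Services</title><a href="/">Home</a><img src="team.jpg">',
    "/old-guide": "<title>Services</title><p>Still useful, but nothing links here.</p>",
}
print(audit(pages, entry_points={"/"}))
```

Dedicated crawlers such as Screaming Frog also handle redirects, absolute URLs, and JavaScript-rendered links, none of which this sketch covers.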

Tools like Screaming Frog, Ahrefs, or Google Search Console can help automate parts of this process, but manual checks are just as important. Auditing at least quarterly ensures your site stays healthy, aligned with current algorithm standards, and free of technical friction. This proactive maintenance supports long-term SEO stability and prevents slow declines in performance.

Chasing Vanity Metrics Over Business Outcomes

Focusing too heavily on vanity metrics like page views, impressions, or the number of ranking keywords can distract from what really matters: conversions, leads, and revenue. High traffic means little if visitors don’t take meaningful action on your site. SEO should support business goals, not just inflate analytics dashboards.

For example, a blog post that ranks for a broad keyword like “marketing tips” might bring in thousands of visitors, but if none of them convert or engage, it adds little value. In contrast, a page that targets “marketing software for small law firms” may attract fewer visitors but drive more qualified leads and sales. That type of performance is far more aligned with business outcomes.
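The trade-off is easier to see with numbers. The figures below are invented for illustration, not benchmarks: the broad post gets far more visitors, but the niche page earns more per visitor. The `PagePerformance` class is a hypothetical helper.

```python
from dataclasses import dataclass


@dataclass
class PagePerformance:
    name: str
    visitors: int
    conversions: int
    revenue: float  # revenue attributed to this page, in dollars

    @property
    def conversion_rate(self) -> float:
        """Share of visitors who converted (0.0 when there were no visitors)."""
        return self.conversions / self.visitors if self.visitors else 0.0

    @property
    def revenue_per_visitor(self) -> float:
        """Attributed revenue divided by visitors."""
        return self.revenue / self.visitors if self.visitors else 0.0


# Invented numbers for illustration only
broad = PagePerformance("marketing tips", visitors=10_000, conversions=5, revenue=2_500.0)
niche = PagePerformance("marketing software for small law firms", visitors=800, conversions=24, revenue=12_000.0)

for page in (broad, niche):
    print(f"{page.name}: {page.visitors} visitors, "
          f"{page.conversion_rate:.2%} conversion, ${page.revenue_per_visitor:.2f} per visitor")
```

Revenue per visitor is one example of the ROI-based KPIs worth tracking alongside raw traffic.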

Tracking conversions, user journey behavior, and ROI-based KPIs helps ensure your SEO strategy delivers impact beyond surface-level numbers. Aligning efforts with business objectives creates sustainable growth and ensures SEO investments support real-world success.

Misaligning SEO With Business and Marketing Goals

When SEO is disconnected from broader business and marketing objectives, it often results in content that ranks but doesn't convert. Teams may focus on traffic-heavy keywords that generate visits but attract the wrong audience. This disconnect wastes time and resources, while failing to move the business forward in measurable ways.

For example, an enterprise software company might publish articles on general productivity tips that pull in readers but don’t align with their actual service offering. While the blog may see increased traffic, none of those visitors are likely to request demos or contact sales. That’s a clear sign of SEO efforts that lack strategic alignment with revenue goals.

Effective SEO strategies are built around buyer personas, sales funnels, and long-term brand positioning. Every keyword, content piece, and optimization task should serve a purpose beyond visibility—supporting customer acquisition, retention, or brand authority. Aligning SEO with business outcomes creates a cohesive strategy where every effort drives real growth.

Conclusion and How to Fix the Most Common SEO Mistakes

Fixing SEO mistakes starts with awareness and consistent effort, not shortcuts or one-time fixes. Many of the most common issues come from neglecting fundamentals like user intent, technical performance, and content quality. By addressing these areas with a long-term mindset, you create a strong foundation for sustainable growth.

Start by auditing your site regularly for broken links, outdated content, slow load times, and crawlability errors. Update your on-page SEO by focusing on intent-driven keywords, clear meta tags, and structured headings. Review your internal linking, fix thin content, and optimize for mobile performance and Core Web Vitals.
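Core Web Vitals come with published thresholds, so "optimize for Core Web Vitals" can become a concrete pass/fail check. This Python sketch encodes Google's current "good" and "poor" boundaries for three metrics: Largest Contentful Paint (LCP), Interaction to Next Paint (INP), and Cumulative Layout Shift (CLS). Each metric is assessed at the 75th percentile of real page loads. The nearest-rank percentile is a simplification, and the sample measurements are hypothetical.

```python
import math

# (good upper bound, needs-improvement upper bound); anything above the second is "poor"
THRESHOLDS = {
    "LCP": (2500, 4000),  # Largest Contentful Paint, milliseconds
    "INP": (200, 500),    # Interaction to Next Paint, milliseconds
    "CLS": (0.1, 0.25),   # Cumulative Layout Shift, unitless score
}


def percentile_75(samples: list[float]) -> float:
    """Nearest-rank 75th percentile of field measurements."""
    if not samples:
        raise ValueError("need at least one measurement")
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))
    return ordered[rank - 1]


def rate(metric: str, value: float) -> str:
    """Classify a 75th-percentile value as good, needs improvement, or poor."""
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"


# Hypothetical field data for one page
field_data = {
    "LCP": [1800, 2100, 2600, 3900],
    "INP": [120, 180, 240, 600],
    "CLS": [0.02, 0.05, 0.08, 0.3],
}
for metric, samples in field_data.items():
    p75 = percentile_75(samples)
    print(f"{metric}: p75 = {p75} -> {rate(metric, p75)}")
```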

Invest time in understanding your audience, refining your content strategy, and aligning SEO with real business goals. Use tools like Google Search Console, analytics platforms, and competitor research to guide your decisions with data. When SEO becomes part of your ongoing strategy rather than an afterthought, you can expect steady improvements in rankings, traffic, and conversions.