Google Search Console can be overwhelming at first, but it’s one of the most powerful free SEO tools available. This guide walks you through search queries, video indexing issues, and practical ways to use the data to improve your site.

Technical SEO: Is It Really That Hard for Beginners? (Find Out!)

Technical SEO, the process of optimizing a website's technical aspects to improve search engine visibility, is a crucial foundation for any successful SEO strategy. This comprehensive guide provides beginners with a structured approach to understanding and implementing key technical SEO elements, from site architecture to structured data, ensuring a solid base for improved organic rankings and user experience.

Introduction to Technical SEO

Technical SEO is the foundation of a website's search engine optimization strategy, focusing on improving the technical aspects that enable search engines to crawl, index, and render web pages effectively. It encompasses a wide range of elements, including site speed, mobile-friendliness, crawlability, and indexation 3. By optimizing these technical components, websites can enhance their visibility in search engine results pages (SERPs) and provide a better user experience.

Key aspects of technical SEO include:

- Site architecture and navigation

- XML sitemaps and robots.txt files

- Page speed optimization

- Mobile responsiveness

- Secure connections (HTTPS)

- Structured data implementation

- Resolving crawl errors and broken links

- Eliminating duplicate content

Mastering these elements allows SEO professionals to build a solid foundation for their websites, ensuring that high-quality content has the best chance of ranking well in search results 2.

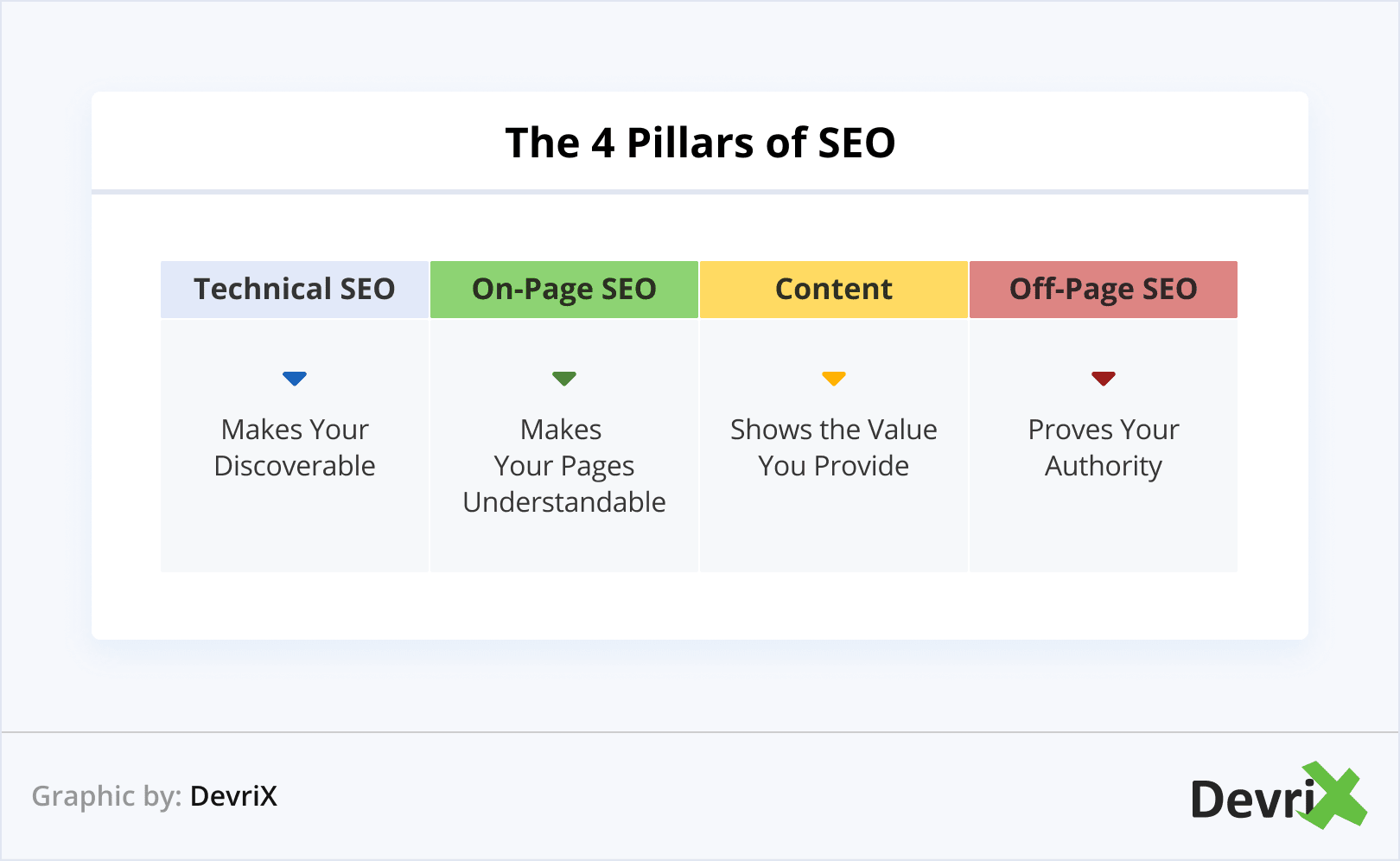

Defining Technical SEO

Technical SEO focuses on optimizing the backend structure and performance of a website to enhance its crawlability, indexability, and overall search engine visibility 2. Unlike on-page SEO, which deals with content optimization and user experience, or off-page SEO, which involves external factors like backlinks, technical SEO addresses the website's infrastructure. Key elements include:

- Site speed optimization: Improving load times through caching, compression, and minification

- Mobile-friendliness: Ensuring responsive design for seamless performance across devices

- URL structure and site architecture: Creating logical hierarchies for efficient crawling

- XML sitemaps and robots.txt: Guiding search engine bots to important pages 7

- HTTPS implementation: Securing connections to build trust and improve rankings

- Structured data markup: Enhancing search result appearance with rich snippets

By optimizing these technical aspects, websites can significantly improve their crawlability and indexability, allowing search engines to more effectively discover, understand, and rank their content 8. This foundational work is crucial for maximizing the potential of both on-page and off-page SEO efforts, ultimately leading to better search visibility and organic traffic growth.

The Impact Of Technical SEO

Technical SEO is crucial for website visibility and performance in search engine results. Google's crawling infrastructure relies on efficient site architecture and clean code to effectively discover and index content. By optimizing technical elements like site speed, mobile responsiveness, and structured data, websites can better align with Google's algorithmic requirements and improve their ranking potential.

A compelling case study demonstrates the impact of technical SEO optimization. One travel company saw a 278% increase in organic traffic year-over-year after implementing a comprehensive technical SEO strategy 3. This included resolving underlying technical issues, optimizing content, and improving site architecture. The results highlight how addressing technical SEO factors can lead to significant traffic growth and improved search engine visibility, even in competitive industries.

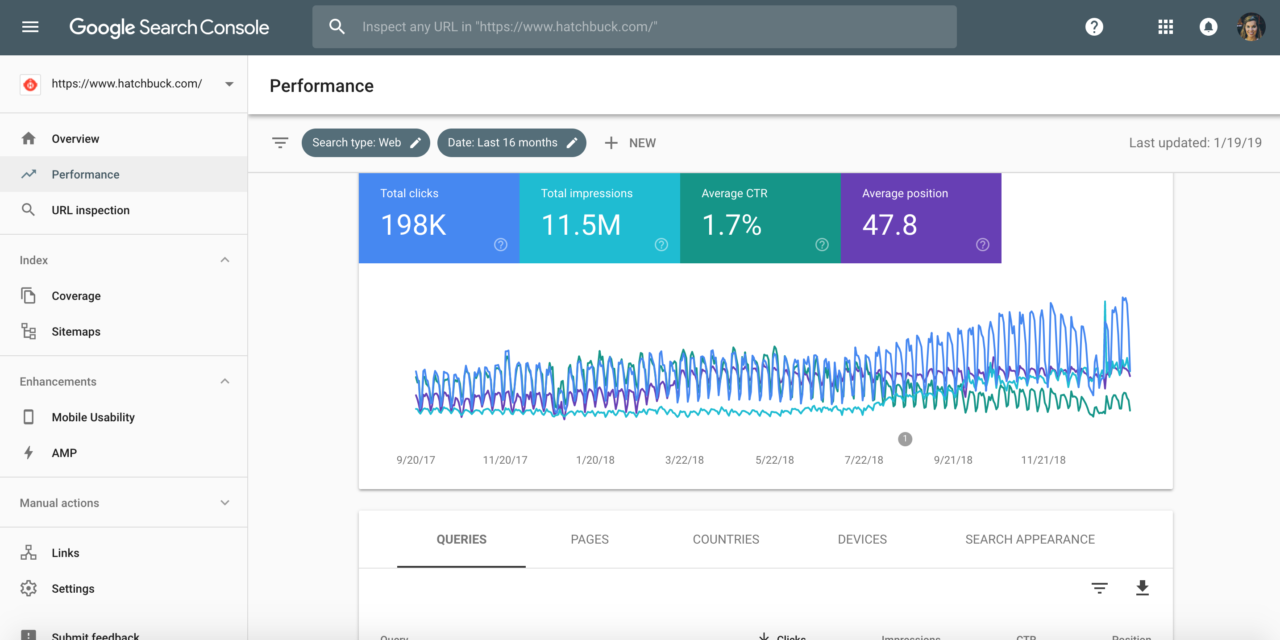

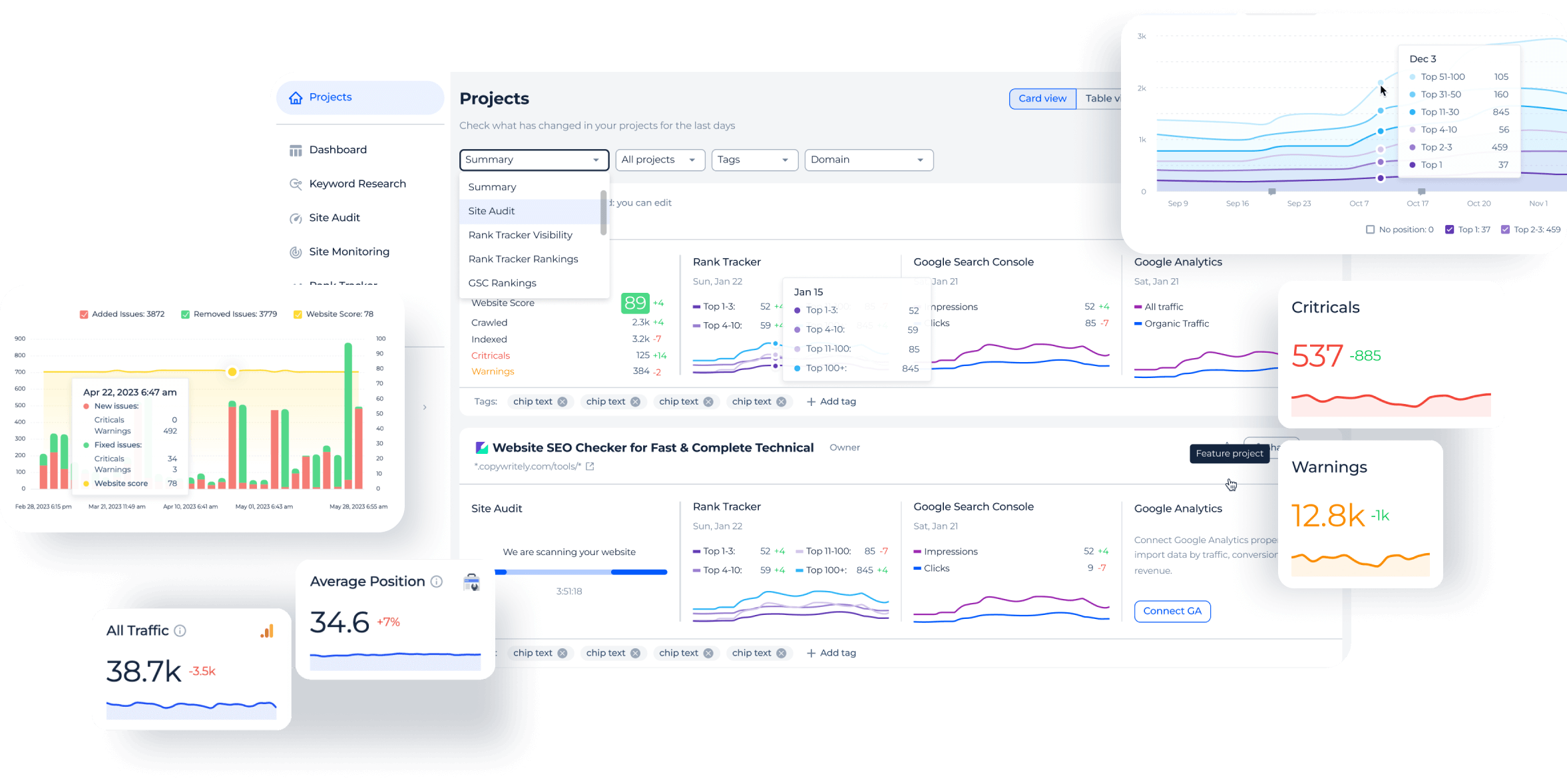

Essential Technical SEO Tools

Technical SEO analysis requires a suite of powerful tools to uncover and address website issues. Google Search Console is essential for monitoring site performance in search results, providing insights on indexing status, crawl errors, and mobile usability 1.

Screaming Frog SEO Spider excels at comprehensive site audits, crawling websites to identify broken links, analyze metadata, and generate XML sitemaps. Google's Lighthouse tool, integrated into Chrome DevTools, offers detailed performance, accessibility, and SEO audits 3.

For competitor analysis, Ubersuggest stands out as an affordable option at $5/month, offering keyword tracking, research, and a SERP score to measure progress 2. It enables users to perform competitor gap analysis by examining top pages by traffic and identifying keyword opportunities.

When combined, these tools provide a robust framework for technical SEO optimization, allowing marketers to improve site structure, performance, and competitiveness in search rankings.

Website Infrastructure Optimization

Website infrastructure optimization is crucial for improving technical SEO performance. A well-structured site architecture enhances crawlability and indexability, leading to better search engine rankings. Key elements include:

- Implementing a clear, logical site structure with a maximum of three clicks from the homepage to any page

- Using a content delivery network (CDN) to distribute assets globally and improve load times

- Optimizing internal linking to create efficient pathways for search engine crawlers

- Regularly updating and adding fresh content to encourage frequent crawling

- Utilizing XML sitemaps to guide search engines to important pages

- Implementing GZIP compression to reduce file sizes and improve page speed

By focusing on these infrastructure optimizations, websites can significantly improve their technical SEO foundation, leading to better crawling efficiency, faster load times, and ultimately, improved search engine visibility and user experience.

Domain and Hosting Essentials

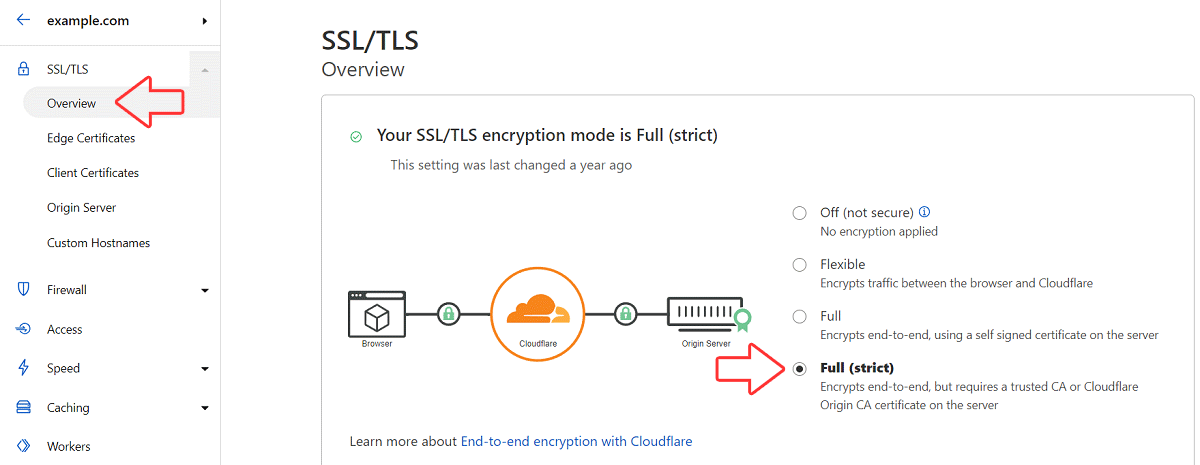

Implementing SSL certificates and optimizing server response time are crucial aspects of technical SEO that can significantly impact website performance and search rankings. When migrating from HTTP to HTTPS, follow a comprehensive checklist to ensure a smooth transition:

- Select and install an appropriate SSL certificate

- Crawl existing website to obtain a list of all URLs

- Update all internal links, canonicals, and hreflang tags to HTTPS

- Implement 301 redirects from HTTP to HTTPS versions

- Update sitemaps and robots.txt files

- Add HTTPS property in Google Search Console

- Monitor analytics and search performance post-migration

To optimize server response time, consider these techniques:

- Invest in robust hosting infrastructure with high uptime guarantees

- Implement caching strategies using tools like Varnish Cache or Redis

- Optimize database queries and regularly fine-tune systems

- Utilize content delivery networks (CDNs) to distribute content globally

- Minify and compress HTML, CSS, and JavaScript files

- Implement load balancing across multiple servers for improved resource utilization

By addressing these domain and hosting considerations, websites can enhance their technical SEO foundation, leading to improved search visibility and user experience.

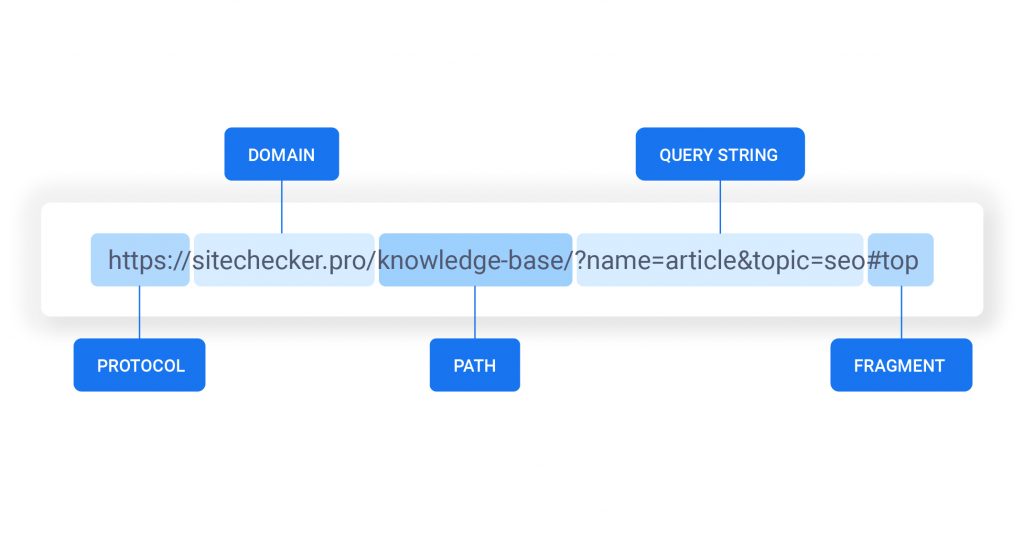

URL Structure Optimization

URL structure plays a crucial role in technical SEO, impacting both search engine crawling and user experience. When creating semantic slugs, follow these best practices:

- Use hyphens to separate words, not underscores or spaces

- Keep URLs short, descriptive, and relevant to the page content

- Include target keywords near the beginning of the URL

- Use lowercase letters only

- Avoid unnecessary parameters, dates, or numbers that may become outdated
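The slug rules above are easy to automate. The Python function below is a minimal sketch, not a production slugifier. It assumes ASCII-only slugs are acceptable, and it caps slugs at six words, an arbitrary limit chosen for illustration.

```python
import re
import unicodedata


def slugify(title: str, max_words: int = 6) -> str:
    """Turn a page title into a short, lowercase, hyphen-separated slug."""
    # Split accented letters into base letter + accent, then drop the non-ASCII accents.
    ascii_text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Lowercase, then replace each run of non-alphanumeric characters
    # (spaces, underscores, punctuation) with a single hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")
    # Keep only the first few words so the URL stays short (six is an arbitrary cap).
    return "-".join(slug.split("-")[:max_words])


print(slugify("Café Menu & Opening Hours"))  # cafe-menu-opening-hours
```

Stop-word removal and keyword placement are still editorial decisions. The function only enforces the mechanical rules: hyphens, lowercase, no special characters.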

For parameter handling and canonicalization:

- Use rel="canonical" link attributes to consolidate ranking signals to the preferred URL version

- Implement proper parameter handling in your sitemap, listing only canonical URLs

- Consider using rel="canonical" HTTP headers for non-HTML resources like PDFs or images

- Avoid using robots.txt or URL removal tools for canonicalization purposes
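As a rough illustration, the Python sketch below normalizes a URL into its preferred version. It then emits that URL in two forms: as a `<link rel="canonical">` element, and as the value of an HTTP `Link` header for files such as PDFs. The sketch assumes the site is fully on HTTPS. The `IGNORED_PARAMS` set is a hypothetical list, because which parameters are safe to drop depends entirely on your site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical list of parameters that do not change page content on this site.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid", "sort"}


def canonical_url(url: str) -> str:
    """Return the preferred (canonical) form of a URL."""
    parts = urlsplit(url)
    # Drop ignored parameters and sort the rest so equivalent URLs compare equal.
    query = sorted(
        (key, value) for key, value in parse_qsl(parts.query)
        if key.lower() not in IGNORED_PARAMS
    )
    # Force HTTPS, lowercase the host (paths stay case-sensitive), drop the fragment.
    return urlunsplit(("https", parts.netloc.lower(), parts.path or "/", urlencode(query), ""))


def canonical_link_tag(url: str) -> str:
    """<link> element for the <head> of an HTML page."""
    return f'<link rel="canonical" href="{canonical_url(url)}">'


def canonical_link_header(url: str) -> str:
    """Value for an HTTP `Link` header on non-HTML files such as PDFs."""
    return f'<{canonical_url(url)}>; rel="canonical"'


print(canonical_link_tag("http://Example.com/shoes?utm_source=mail&color=red"))
print("Link: " + canonical_link_header("http://example.com/guide.pdf"))
```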

By implementing these URL structure best practices, you can improve your website's crawlability, reduce duplicate content issues, and enhance overall search engine visibility.

XML Sitemap Creation

XML sitemaps are crucial for ensuring search engines can efficiently crawl and index your website's content. Many content management systems (CMS) offer built-in sitemap generation capabilities or plugins that simplify the process 1.

For WordPress users, popular options include Yoast SEO and Google XML Sitemaps, while Joomla and Drupal have their own dedicated sitemap extensions. These plugins automatically create and update sitemaps as you add or modify content, ensuring search engines always have access to your latest pages.

Once generated, it's essential to validate your sitemap using Google Search Console. This tool allows you to submit your sitemap, check its status, and identify any crawling or indexing issues 3.

To do this, log into Search Console, navigate to the 'Sitemaps' section, enter your sitemap URL, and submit it for analysis. The console will provide a report on discovered URLs, their indexing status, and any errors encountered, helping you maintain an optimized sitemap for improved search visibility.
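If your CMS does not generate a sitemap, you can build a basic one with Python's standard library. This is a minimal sketch that assumes you already have a list of canonical URLs with last-modified dates. It writes the `urlset` format defined at sitemaps.org and leaves out the optional tags.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Return sitemap XML for (canonical URL, last-modified date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc          # ElementTree escapes & as &amp;
        ET.SubElement(url, "lastmod").text = lastmod  # W3C date format, e.g. 2024-05-01
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


xml_text = build_sitemap([
    ("https://example.com/", "2024-05-01"),
    ("https://example.com/blog/technical-seo", "2024-04-18"),
])
print(xml_text)
```

A single sitemap file is limited to 50,000 URLs and 50 MB uncompressed. Larger sites need several sitemaps tied together by a sitemap index file.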

Optimizing Crawl Efficiency

Crawl efficiency management is crucial for optimizing how search engines discover and index your website's content. To improve crawl efficiency:

- Implement a flat site structure, keeping important pages within 2-3 clicks from the homepage

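- Serve your site over HTTP/2, which lets crawlers such as Googlebot fetch multiple URLs over a single connection and can reduce server overhead (HTTP/2 server push is not needed, and Chrome has removed support for it)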

- Leverage XML sitemaps to guide search engines to important pages, updating them regularly

- Optimize internal linking to create clear pathways for crawlers and distribute link equity

- Manage your crawl budget by blocking low-value pages using robots.txt and returning proper status codes for removed content

By focusing on these strategies, you can ensure search engines crawl your most valuable content efficiently, leading to better indexation and potentially improved search rankings 3.
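To check the 2-3 click guideline on your own site, you can compute each page's click depth with a breadth-first search over the internal link graph. The sketch below assumes you already have that graph as a dictionary that maps each URL to the URLs it links to, for example exported from a crawler. The example site is made up.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str) -> dict[str, int]:
    """Minimum number of clicks from the homepage to every reachable page (BFS)."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # BFS reaches each page first by a shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def too_deep(links: dict[str, list[str]], home: str, max_clicks: int = 3) -> list[str]:
    """Pages that need more than `max_clicks` clicks from the homepage."""
    return sorted(page for page, d in click_depths(links, home).items() if d > max_clicks)


# Made-up site where an old post is only reachable through deep pagination.
site = {
    "/": ["/blog", "/shop"],
    "/blog": ["/blog/page-2"],
    "/blog/page-2": ["/blog/page-3"],
    "/blog/page-3": ["/blog/old-post"],
}
print(too_deep(site, "/"))  # ['/blog/old-post']
```

Pages missing from `click_depths` entirely are not reachable through links at all, which makes them orphan pages.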

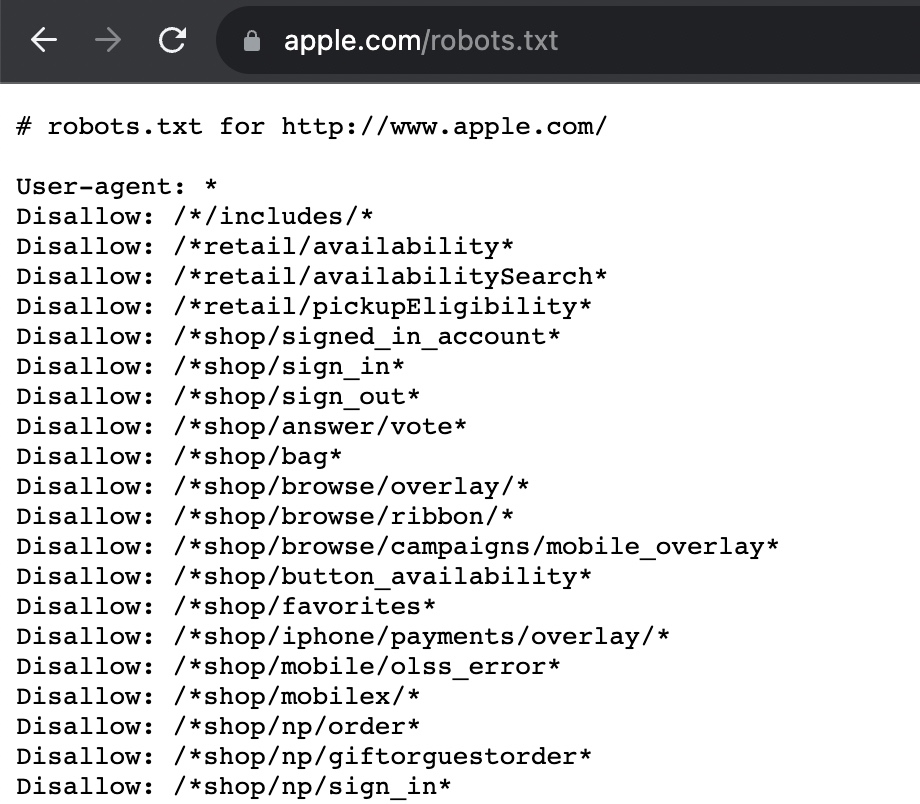

Robots.txt File Essentials

The robots.txt file is a crucial tool for controlling search engine access to your website. When configuring disallow directives for sensitive pages, use specific paths to prevent crawlers from accessing private content:

- Disallow: /admin/

- Disallow: /login/

- Disallow: /private/

However, be cautious not to overblock or make common mistakes that can harm your SEO efforts. One critical error to avoid is blocking CSS and JavaScript files, as this prevents search engines from properly rendering and understanding your pages. Instead of using broad directives like:

- Disallow: /scripts/

- Disallow: /styles/

Allow search engines to access these essential resources. Remember that the paths in robots.txt rules are case-sensitive, so make sure your directives match the exact URL paths. Additionally, avoid blocking URLs that are set to NOINDEX. A crawler that cannot fetch a page never sees its noindex directive, so the URL may still be indexed (without its content) if other pages link to it 3. By carefully configuring your robots.txt file, you can keep crawlers out of areas they don't need to visit while maintaining optimal crawlability and indexability for your website.
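Before deploying changes, you can sanity-check your rules with Python's built-in `urllib.robotparser`. This sketch parses the example disallow rules above and reports which hypothetical paths a crawler identifying as Googlebot may fetch.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /login/
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# CSS and JavaScript paths should come back as allowed so pages can be rendered.
for path in ["/admin/settings", "/blog/technical-seo", "/styles/main.css", "/scripts/app.js"]:
    allowed = parser.can_fetch("Googlebot", "https://example.com" + path)
    print(f"{path}: {'allowed' if allowed else 'blocked'}")
```

Python's parser applies rules in file order. It may not reproduce every detail of Google's matching, such as most-specific-rule precedence and wildcard patterns. Treat it as a quick check and confirm the result with the robots.txt report in Google Search Console.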

Redirect Strategy Essentials

When implementing redirects, it's crucial to choose between 301 (permanent) and 302 (temporary) redirects based on your specific needs. Use 301 redirects for permanent URL changes, such as site migrations, page relocations, or content consolidation 1.

These redirects signal to search engines that the move is permanent, allowing them to transfer ranking signals to the new URL. In contrast, 302 redirects are ideal for temporary changes, like website maintenance or short-term promotions.

To eliminate redirect chains, which can negatively impact SEO and user experience, follow these steps:

- Identify existing redirect chains using tools like Screaming Frog or online redirect checkers

- Update redirects to point directly from the old URL to the final destination URL

- Modify your .htaccess file or use a WordPress plugin to implement the changes

- Test the new redirects to ensure they work correctly

- Update internal links to point directly to the final URLs, so that users and crawlers no longer pass through any redirect
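Collapsing chains is essentially a graph problem: follow each redirect until you reach a URL that no longer redirects. This Python sketch assumes your redirects are available as a simple old-URL-to-new-URL mapping, for example exported from a crawler or your server configuration. It raises an error on loops rather than following them forever.

```python
def flatten_redirects(redirects: dict[str, str]) -> dict[str, str]:
    """Point every redirecting URL straight at its final destination."""
    flattened = {}
    for source in redirects:
        seen = {source}
        target = redirects[source]
        # Keep following the chain while the current target itself redirects.
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        flattened[source] = target
    return flattened


redirects = {
    "/old-page": "/new-page",
    "/new-page": "/newer-page",
    "/newer-page": "/final-page",
}
for source, target in flatten_redirects(redirects).items():
    print(f"{source} -> {target}")
```

The flattened mapping can then be written back into your `.htaccess` rules or redirect plugin, with one hop per old URL.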

By implementing an effective redirect strategy and eliminating redirect chains, you can improve your website's crawlability, preserve SEO value, and enhance overall user experience 3.

Indexation Control Techniques

Indexation control methods are crucial for managing how search engines interact with your website's content. The two primary approaches are encouraging and discouraging indexation, each with specific techniques:

To discourage indexation:

- Use the "noindex" meta robots tag to instruct search engines not to index specific pages

- Implement robots.txt directives to prevent crawling of certain URLs or directories

- Apply canonical tags to consolidate duplicate content and direct indexing to the preferred version

To encourage indexation:

- Ensure high-quality, unique content on pages you want indexed

- Use internal and external links to enhance visibility and authority of important URLs

- Implement self-referencing canonical tags to reinforce the canonical status of a page

It's important to note that robots.txt prevents crawling but doesn't guarantee non-indexation. For sensitive content, use noindex tags on pages that crawlers can still fetch (so that the tag is seen), or use server-side controls such as password protection, rather than relying on robots.txt 3. Regularly monitor indexation status using tools like Google Search Console to ensure your indexation control methods are effective 5.

Meta Robots Tag Strategy

Meta robots tags are powerful tools for controlling how search engines interact with your web pages. The "noindex" directive tells search engines not to include a page in their index, while "nofollow" instructs them not to follow links on that page 1. These tags are particularly useful for keeping duplicate or low-value pages out of search results. They do not reduce crawling, however: a search engine must crawl a page to see its noindex tag, so robots.txt is the better tool for saving crawl budget.

Key use cases for noindex/nofollow tags include:

- Preventing indexation of low-value pages like search results, login pages, or admin areas

- Managing duplicate content on e-commerce sites with multiple product variations

- Controlling indexation of paginated content or archives

- Keeping large sets of near-identical pages on big sites out of the index

When implementing these tags, it's crucial to use them carefully. Overuse of noindex can potentially harm SEO by preventing valuable content from being indexed 3.

To combat duplicate content, use canonical tags or redirects to guide search engines to the preferred version of content, and reserve noindex for pages that should stay out of search results entirely. Pairing noindex with a canonical tag that points elsewhere sends search engines conflicting signals 5. Always monitor the impact of noindex implementation using tools like Google Search Console to ensure critical pages remain indexed and visible in search results 6.
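To monitor noindex and canonical tags during an audit, you can extract them from each page's HTML. The sketch below uses Python's standard `html.parser` and only inspects `<meta name="robots">`, `<meta name="googlebot">`, and `<link rel="canonical">`. Directives sent in an `X-Robots-Tag` HTTP header would need a separate check.

```python
from html.parser import HTMLParser


class IndexabilityParser(HTMLParser):
    """Collect meta robots directives and the canonical URL from an HTML page."""

    def __init__(self):
        super().__init__()
        self.robots = set()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attrs = {name: (value or "") for name, value in attrs}
        if tag == "meta" and attrs.get("name", "").lower() in {"robots", "googlebot"}:
            # content="noindex, follow" -> {"noindex", "follow"}
            self.robots.update(d.strip().lower() for d in attrs.get("content", "").split(","))
        elif tag == "link" and "canonical" in attrs.get("rel", "").lower().split():
            self.canonical = attrs.get("href")


def audit(html: str) -> dict:
    parser = IndexabilityParser()
    parser.feed(html)
    # "none" is shorthand for "noindex, nofollow".
    indexable = not ({"noindex", "none"} & parser.robots)
    return {"indexable": indexable, "canonical": parser.canonical}


page = ('<html><head><meta name="robots" content="noindex, follow">'
        '<link rel="canonical" href="https://example.com/a"></head></html>')
print(audit(page))
```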

Canonicalization Techniques

Canonicalization techniques are essential for managing duplicate content and consolidating ranking signals. Self-referential canonicals serve as safety nets by indicating the preferred version of a page, even when no duplicates exist 1.

This practice helps prevent issues with URL variations like mixed case or www/non-www versions 2. Cross-domain canonicals, on the other hand, are valuable for managing content across multiple domains. They're particularly useful for syndicated content, allowing publishers to benefit from wider distribution while preserving SEO value for the original source 3.

For example, if you syndicate blog posts, using cross-domain canonicals pointing back to your main site ensures that search engines attribute the content's value to your domain, regardless of where it's republished 4. These techniques help consolidate link equity, improve crawl efficiency, and clarify content ownership for search engines 5.

Pagination Best Practices

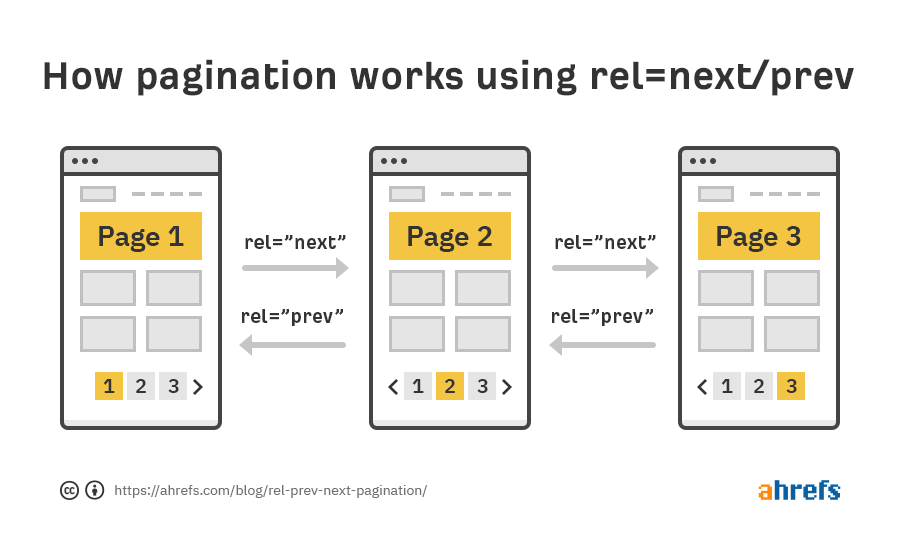

Implementing rel="next" and rel="prev" attributes is a common way to mark up pagination. These attributes help search engines understand the relationship between pages in a sequence 1. Google announced in 2019 that it no longer uses them as an indexing signal. However, other search engines may still read them, and they remain valid HTML. For proper implementation:

- Add rel="next" to the first page, pointing to the second page

- Use both rel="prev" and rel="next" on intermediate pages

- Include only rel="prev" on the last page

- Ensure these attributes are placed in the `<head>` section of your HTML
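The tags for any page in a series can be generated mechanically. This sketch assumes a hypothetical URL scheme: page 1 lives at the base URL, and later pages use a `?page=N` parameter. Adapt `page_url` to your own structure.

```python
def pagination_links(base_url: str, page: int, last_page: int) -> list[str]:
    """Return the rel="prev"/rel="next" <link> tags for one page of a series."""

    def page_url(n: int) -> str:
        # Hypothetical URL scheme: page 1 is the base URL, later pages use ?page=N.
        return base_url if n == 1 else f"{base_url}?page={n}"

    tags = []
    if page > 1:  # every page except the first points back
        tags.append(f'<link rel="prev" href="{page_url(page - 1)}">')
    if page < last_page:  # every page except the last points forward
        tags.append(f'<link rel="next" href="{page_url(page + 1)}">')
    return tags


for n in (1, 2, 3):
    print(n, pagination_links("https://example.com/blog", n, 3))
```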

When considering view-all pages, it's important to weigh the benefits against potential performance issues. If a view-all page exists, use a rel="canonical" tag on paginated pages pointing to it, but only if the view-all page loads quickly and provides a good user experience 1. For frequently updated content, such as blogs, maintain the standard pagination structure rather than relying solely on a view-all page to ensure proper indexation of new content 3.

Mobile-First Optimization

Mobile-first optimization is crucial in today's digital landscape, with Google prioritizing mobile-friendly websites through mobile-first indexing 1. To ensure your website is optimized for mobile devices:

- Implement responsive design to adapt content seamlessly across all screen sizes

- Optimize page speed by compressing images, minifying code, and leveraging browser caching

- Ensure touch-friendly navigation with appropriately sized buttons and menus

- Use legible fonts and maintain proper text formatting for easy reading on small screens

- Implement Accelerated Mobile Pages (AMP) for faster loading on mobile devices

- Test your site with Lighthouse in Chrome DevTools and address any issues identified (Google's standalone Mobile-Friendly Test tool has been retired)

By focusing on these mobile optimization strategies, you can improve user experience, reduce bounce rates, and potentially boost your search engine rankings in an increasingly mobile-centric world 8.

Mobile Usability Metrics

Mobile usability testing is crucial for ensuring optimal user experience and search engine performance. Google's Mobile-Friendly Test tool, now retired 1, has been replaced by more comprehensive tools like Lighthouse, which is integrated into Chrome DevTools 2. These tools assess various aspects of mobile usability, including responsiveness, touch element sizing, and text readability.

Core Web Vitals, a set of user-centric performance metrics, play a significant role in mobile optimization. The thresholds for a good user experience are: Largest Contentful Paint (LCP) of 2.5 seconds or less, Cumulative Layout Shift (CLS) of 0.1 or less, and Interaction to Next Paint (INP) of 200 milliseconds or less, measured at the 75th percentile of page loads 3.

Meeting these thresholds is essential for both mobile and desktop experiences, as Google uses the same criteria across devices to simplify understanding and implementation 4.
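These thresholds translate into a simple classifier. The sketch below also includes Google's published upper bounds for the "needs improvement" band: LCP of 4 seconds, INP of 500 milliseconds, and CLS of 0.25. Anything above those is rated poor. It adds a nearest-rank 75th percentile helper because Google assesses Core Web Vitals at the 75th percentile; real field tools may compute percentiles slightly differently. The sample values are invented.

```python
import math

# (upper bound of "good", upper bound of "needs improvement") per metric
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "INP": (200, 500),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


def rate(metric: str, value: float) -> str:
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


def p75(samples: list[float]) -> float:
    """Nearest-rank 75th percentile of field measurements."""
    ordered = sorted(samples)
    return ordered[math.ceil(0.75 * len(ordered)) - 1]


# Invented LCP measurements (seconds) from real-user monitoring.
lcp_samples = [1.9, 2.2, 2.4, 2.8, 3.1, 1.7, 2.0, 2.6]
print(rate("LCP", p75(lcp_samples)))
```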

AMP Alternatives

Progressive Web Apps (PWAs) have emerged as a powerful alternative to Accelerated Mobile Pages (AMP) for enhancing mobile web experiences. PWAs offer app-like functionality, including offline access and push notifications, while maintaining the accessibility of web pages 1. Unlike AMP's restricted HTML and CSS, PWAs allow for more engaging and dynamic content.

AMP pros include significantly faster loading times, improved SEO visibility, and lower bounce rates 3. However, AMP's limitations include creative constraints, restricted functionality, and potential maintenance overhead. PWAs, on the other hand, offer greater flexibility and functionality but may not match AMP's loading speed for static content 4.

Some developers are now integrating both technologies, leveraging AMP for initial fast loading and PWAs for richer experiences. This hybrid approach allows websites to start fast with AMP and transition to more dynamic PWA functionality, combining the benefits of both technologies 4.

Site Speed Optimization

Site speed optimization is crucial for improving user experience and search engine rankings. To enhance your website's performance:

- Minimize HTTP requests by combining CSS and JavaScript files, and using CSS sprites for images

- Implement browser and server-side caching to reduce load times for returning visitors

- Optimize image sizes through compression and using modern formats like WebP

- Enable GZIP compression to reduce file sizes

- Utilize a Content Delivery Network (CDN) to distribute content globally

- Implement lazy loading for images and videos to prioritize above-the-fold content

- Use asynchronous loading for CSS and JavaScript files to prevent render-blocking

By implementing these strategies, you can significantly improve your website's loading speed, leading to better user engagement and potentially higher search engine rankings. Regular performance testing using tools like Google PageSpeed Insights or Lighthouse can help identify areas for ongoing optimization 7.
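To see why GZIP compression matters, the following Python snippet compresses a repetitive, made-up HTML fragment with the standard `gzip` module and compares the sizes. Real savings depend on your markup. In production, compression is normally switched on in the web server or CDN rather than in application code.

```python
import gzip

# Made-up, repetitive markup similar to a product listing page.
html = ("<div class='product-card'><h2>Product</h2><p>Description</p></div>\n" * 200).encode("utf-8")

compressed = gzip.compress(html, compresslevel=6)
print(f"original: {len(html)} bytes, gzipped: {len(compressed)} bytes "
      f"({100 * len(compressed) / len(html):.1f}% of original)")

# Browsers decompress transparently when the response carries Content-Encoding: gzip.
assert gzip.decompress(compressed) == html
```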

Core Web Vitals Explained

Core Web Vitals are crucial metrics for assessing website performance and user experience. The three key metrics are Largest Contentful Paint (LCP), Interaction to Next Paint (INP), which replaced First Input Delay (FID) in March 2024, and Cumulative Layout Shift (CLS) 1. To measure these metrics, several tools are available:

- Google PageSpeed Insights: Provides both lab and field data for Core Web Vitals

- Google Search Console: Offers a Core Web Vitals report based on real-world field data

- Web Vitals Chrome Extension: Displays lab and field data for LCP, INP, and CLS

- Chrome DevTools Performance Panel: Helps detect layout shifts and analyze Core Web Vitals

The choice between server-side rendering (SSR) and client-side rendering (CSR) significantly impacts Core Web Vitals. SSR generally offers faster initial load times and better SEO performance, as fully rendered pages are sent from the server 4.

CSR, while enabling rich interactivity, can lead to longer initial load times and potential SEO challenges, as content is rendered in the browser 5. Developers must carefully consider these trade-offs when optimizing for Core Web Vitals and overall user experience.

Image Optimization Strategies

Image optimization is crucial for improving website performance and SEO rankings. WebP and AVIF are modern image formats that offer superior compression compared to traditional formats like JPEG and PNG 2.

To implement WebP/AVIF conversion, use tools like Cloudinary's free online converter or WordPress plugins like Converter for Media 3. These tools can automatically convert images to the most efficient format based on the user's browser support 1.

Lazy loading is another essential technique for optimizing image delivery. It involves loading images only when they enter the viewport, reducing initial page load times 4. Implement lazy loading using the loading="lazy" attribute on image tags, or use the browser's Intersection Observer API from JavaScript for more advanced functionality 5.

When properly implemented, lazy loading can significantly improve page speed and user experience, contributing to better SEO performance 7.
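Format fallbacks and lazy loading can be combined in a single `<picture>` element. The browser uses the first `<source>` whose type it supports and otherwise falls back to the `<img>`. The Python helper below is a sketch that assumes AVIF, WebP, and JPEG versions already exist under the same file stem (a hypothetical naming convention). Explicit `width` and `height` attributes let the browser reserve space and avoid layout shift.

```python
from html import escape


def responsive_image(stem: str, alt: str, width: int, height: int, lazy: bool = True) -> str:
    """<picture> markup offering AVIF, then WebP, then a JPEG fallback."""
    loading = ' loading="lazy"' if lazy else ""
    return (
        "<picture>"
        f'<source srcset="{stem}.avif" type="image/avif">'
        f'<source srcset="{stem}.webp" type="image/webp">'
        # escape() also converts quotes, so alt text cannot break the attribute.
        f'<img src="{stem}.jpg" alt="{escape(alt)}" width="{width}" height="{height}"{loading}>'
        "</picture>"
    )


print(responsive_image("/img/trail-shoes", "Red trail running shoes", 800, 600))
```

The `lazy` switch exists because the main above-the-fold image should usually not be lazy-loaded, since delaying it can worsen LCP.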

Structured Data Implementation

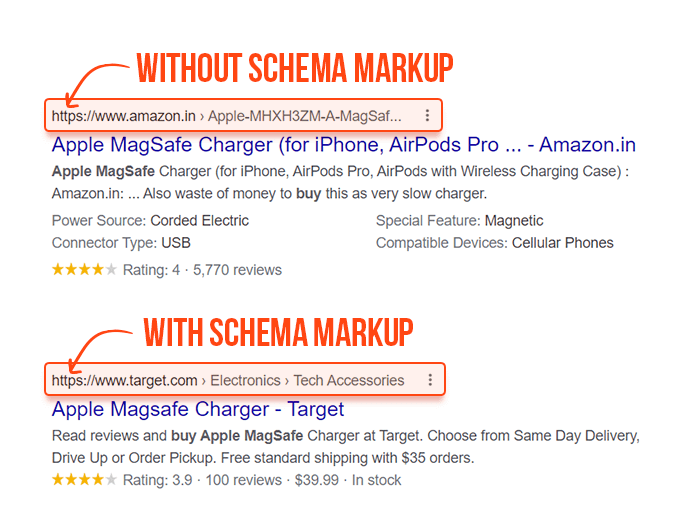

Structured data implementation is a crucial aspect of technical SEO that helps search engines better understand and interpret your website's content. By using schema markup, you can provide search engines with clear, machine-readable information about your pages, potentially leading to enhanced search results and improved visibility 1.

To implement structured data effectively:

- Choose the appropriate schema type for your content (e.g., Article, Product, FAQ)

- Use Google's Structured Data Markup Helper to generate the necessary code

- Implement the markup using JSON-LD format, which is Google's preferred method

- Test your implementation using Google's Rich Results Test tool

- Monitor performance in Google Search Console's Rich Results report

While structured data doesn't directly impact rankings, it can significantly improve click-through rates by making your search listings more attractive and informative 2. For example, implementing Product schema for e-commerce sites can lead to rich snippets that stand out in search results, potentially driving more targeted traffic to your website 5. Note that Google has retired HowTo rich results, so HowTo schema for instructional content no longer produces enhanced listings.
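JSON-LD markup is plain JSON inside a `<script type="application/ld+json">` tag, so it can be generated from your CMS data. This sketch builds a basic Article object with Python's `json` module. The property set is illustrative rather than a complete list of what Google recommends, and the author and URLs are placeholders.

```python
import json


def article_json_ld(headline: str, author: str, published: str, url: str) -> str:
    """Return a <script> block containing Article structured data."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": published,  # ISO 8601 date, e.g. "2024-05-01"
        "mainEntityOfPage": url,
    }
    payload = json.dumps(data, indent=2, ensure_ascii=False)
    # Escape "</" so a value such as "</script>" cannot close the tag early;
    # "<\/" is still valid JSON and decodes back to "</".
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


print(article_json_ld("Technical SEO for Beginners", "Jane Doe", "2024-05-01",
                      "https://example.com/technical-seo"))
```

Paste the generated block into a page and run it through the Rich Results Test before rolling it out site-wide.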

Schema Markup Formats

Schema markup is a standardized vocabulary used by search engines to better understand and interpret website content. While there are multiple formats for implementing schema markup, JSON-LD and Microdata are the most common. Here's a comparison of these two formats and how to test your implementation:

| Aspect | JSON-LD | Microdata |
|---|---|---|
| Implementation | Separate `<script type="application/ld+json">` block in HTML | Embedded within HTML tags |
| Readability | Clean and easily readable | Can clutter HTML structure |
| Maintenance | Easier to update and troubleshoot | More complex to maintain |
| Google's Preference | Preferred and endorsed | Supported but not preferred |
| Complexity | Simpler and non-intrusive | More complex and intrusive |

JSON-LD is generally recommended due to its ease of use, readability, and strong support from Google 1. To test your schema markup implementation, use Google's Rich Results Test tool. This tool allows you to validate your structured data and confirm eligibility for rich results in Google Search 3. By inputting your URL or code snippet, you can ensure your schema markup is correctly implemented and optimized for search engine visibility.

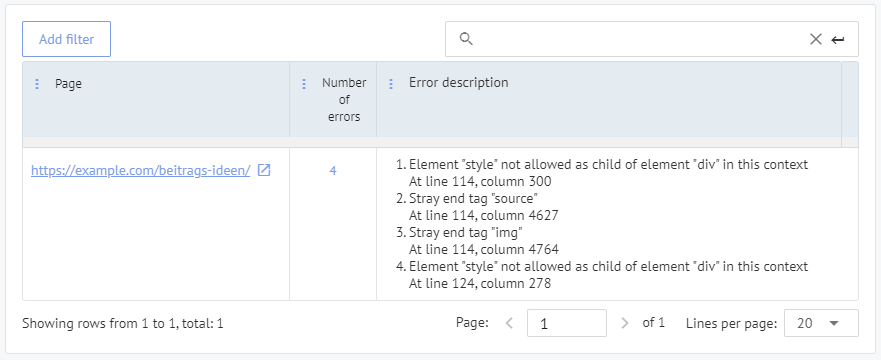

Fixing Markup Errors

Structured data errors can significantly impact your website's visibility in search results. Two common issues are data inconsistency warnings and missing required fields. Data inconsistency warnings occur when the information in your structured data doesn't match the visible content on your page. To resolve these, ensure that all schema properties accurately reflect the content users see on your website.

Missing required field errors are more critical and can prevent your content from being eligible for rich results 2. To fix these:

- Identify the required fields for your specific schema type using Google's Search Central documentation

- Add the missing properties to your markup

- Use Google's Rich Results Test tool to validate your changes

- If using dynamic content, ensure all required fields are populated correctly

Remember, while warnings suggest improvements, addressing errors is crucial for maintaining your site's SEO performance and eligibility for rich snippets 4.
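When structured data is generated dynamically, a quick pre-publish check for empty or missing properties can catch many missing-field errors before the Rich Results Test does. The `REQUIRED` mapping in this sketch is a hypothetical, simplified stand-in; take the real required properties for each type from Google's Search Central documentation. The sketch also assumes each item has a single string `@type`.

```python
# Hypothetical, simplified requirements; consult Google's documentation for the real lists.
REQUIRED = {
    "Product": ["name", "offers"],
    "Article": ["headline"],
}

EMPTY_VALUES = (None, "", [], {})


def missing_fields(item: dict) -> list[str]:
    """Required properties that are absent or empty in one structured-data item."""
    item_type = item.get("@type")
    required = REQUIRED.get(item_type, []) if isinstance(item_type, str) else []
    return [field for field in required if item.get(field) in EMPTY_VALUES]


# A product whose price data failed to load from the database.
product = {"@type": "Product", "name": "Trail Running Shoe", "offers": {}}
print(missing_fields(product))  # ['offers']
```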

Security Meets Accessibility

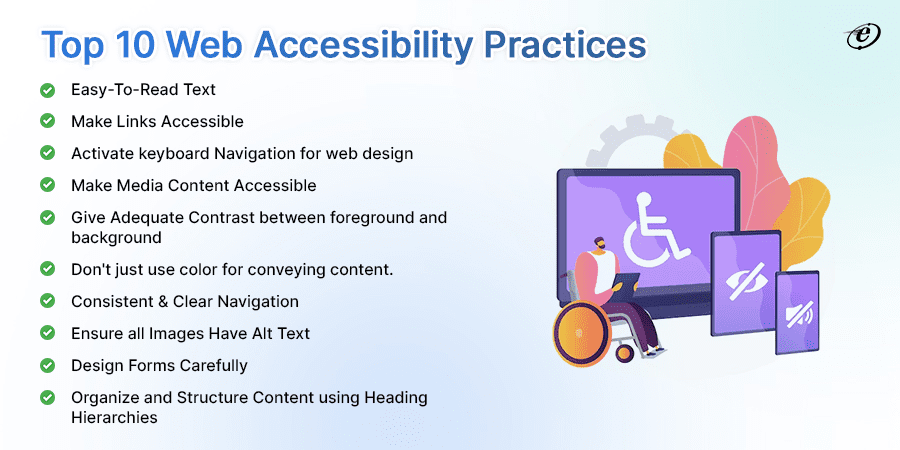

Web accessibility and cybersecurity are interconnected aspects of digital design that significantly impact user experience and data protection.

Accessible websites often incorporate features that enhance security, such as clear error messages and consistent navigation, which can help users avoid potential security pitfalls 1. However, accessibility features can also introduce unique security challenges if not implemented properly.

Key considerations for balancing security and accessibility include:

- Ensuring screen reader compatibility without compromising secure login processes

- Implementing keyboard accessibility for all functions, including security controls

- Providing alternative text for security-related images and CAPTCHAs

- Designing clear, understandable error messages for both security and accessibility purposes

- Regular automated and manual audits to identify both accessibility and security vulnerabilities

By prioritizing both accessibility and security in web design, organizations can create inclusive digital environments that protect all users, regardless of their abilities or assistive technology needs.

HTTPS Migration Essentials

When migrating to HTTPS, addressing mixed content errors and implementing HSTS preloading are crucial steps for ensuring a secure and seamless transition. To resolve mixed content issues:

- Identify mixed content using browser developer tools or online checkers

- Update all internal links, including images, scripts, and stylesheets, to use HTTPS

- For external resources, switch to HTTPS versions or host them locally if HTTPS is unavailable

- Implement Content Security Policy headers to upgrade insecure requests automatically
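You can script a first pass at finding insecure references. The sketch below uses Python's `html.parser` to list `http://` URLs in `src`, `href`, and `srcset` attributes. It flags ordinary `<a>` links too, which are not mixed content but are worth updating where an HTTPS version exists. It does not see URLs inside CSS files, inline styles, or resources added by JavaScript, so browser developer tools remain the final check.

```python
from html.parser import HTMLParser

URL_ATTRS = {"src", "href", "srcset"}


class InsecureReferenceFinder(HTMLParser):
    """Collect http:// URLs from attributes that reference other resources."""

    def __init__(self):
        super().__init__()
        self.found = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name not in URL_ATTRS or not value:
                continue
            # srcset can hold several "url descriptor" pairs separated by commas.
            candidates = value.split(",") if name == "srcset" else [value]
            for candidate in candidates:
                parts = candidate.split()
                if parts and parts[0].lower().startswith("http://"):
                    self.found.append((tag, name, parts[0]))


def find_insecure(html: str) -> list[tuple[str, str, str]]:
    finder = InsecureReferenceFinder()
    finder.feed(html)
    return finder.found


page = ('<img src="http://example.com/a.jpg">'
        '<script src="https://cdn.example.com/x.js"></script>'
        '<link rel="stylesheet" href="http://example.com/s.css">')
for tag, attr, url in find_insecure(page):
    print(f"<{tag} {attr}> loads {url}")
```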

After resolving mixed content, implement HSTS preloading to enhance security:

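- Start with a short max-age in the Strict-Transport-Security header (e.g., 300 seconds, or 5 minutes)

- Gradually increase the max-age, for example to 1 week and then 1 month, checking that nothing breaks at each step

- Once you are confident, set a long max-age (e.g., 63072000 seconds, or 2 years); the preload list requires at least 1 year (31536000 seconds)

- Include the 'includeSubDomains' and 'preload' directives in the header

- Submit your domain to the HSTS preload list at hstspreload.org after thorough testing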

By following these steps, you can ensure a robust HTTPS implementation that protects against downgrade attacks and provides a secure browsing experience for your users.
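The HSTS ramp-up can be sketched in Python with two helpers. One builds the header value. The other roughly checks it against the preload requirements published at hstspreload.org: a max-age of at least one year plus `includeSubDomains` and `preload`. The schedule in the loop is only an example. The real submission form also checks your certificate, your HTTP-to-HTTPS redirect, and your subdomains, which this sketch does not.

```python
def hsts_header(max_age: int, include_subdomains: bool = True, preload: bool = False) -> str:
    """Build a Strict-Transport-Security header value."""
    parts = [f"max-age={max_age}"]
    if include_subdomains:
        parts.append("includeSubDomains")
    if preload:
        parts.append("preload")
    return "; ".join(parts)


def preload_ready(header: str) -> bool:
    """Rough check of a header value against the preload list's header requirements."""
    directives = [d.strip().lower() for d in header.split(";") if d.strip()]
    max_age = 0
    for d in directives:
        if d.startswith("max-age="):
            max_age = int(d.split("=", 1)[1].strip('"'))  # value may be quoted
    return max_age >= 31536000 and "includesubdomains" in directives and "preload" in directives


# Example ramp-up: 5 minutes, 1 week, 1 month, then 2 years with preload.
for seconds in (300, 604800, 2592000, 63072000):
    header = hsts_header(seconds, preload=seconds >= 31536000)
    print(f"Strict-Transport-Security: {header}  ->  preload-ready: {preload_ready(header)}")
```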

Accessibility Best Practices

WCAG compliance fundamentals include proper implementation of alt text and ARIA landmark roles to enhance web accessibility. For alt text, keep descriptions concise (under 150 characters) and relevant to the image's context 1. Avoid using phrases like "image of" or "photo of," as screen readers already identify elements as images. End alt text with a period for proper screen reader pausing.

ARIA landmark roles help structure web pages for easier navigation by assistive technologies 3. Key roles include:

- role="main" for primary content

- role="navigation" for menus and links

- role="banner" for site-wide information

- role="search" for search functionality

- role="complementary" for supporting content

When using multiple instances of the same role, use aria-label to distinguish between them 5. Implementing these practices improves accessibility and user experience for all visitors, contributing to better WCAG compliance and potentially higher search engine rankings.

Security Header Configuration

Content Security Policy (CSP) is a crucial security measure that helps prevent various types of attacks, including cross-site scripting (XSS) and data injection. To implement CSP, add the following header to your server's HTTP response:

`Content-Security-Policy: default-src 'self'; script-src 'self' https://trusted-cdn.com;`

This example allows content from your own domain and scripts from a trusted CDN. Customize the policy based on your site's needs, gradually tightening restrictions to avoid breaking functionality 3.

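Modern browsers such as Chrome, Edge, and Firefox no longer support the X-XSS-Protection header. Older guides often recommend enabling it for legacy browsers with:

`X-XSS-Protection: 1; mode=block`

This setting turns on the legacy XSS filter and blocks page rendering if an attack is detected. Because that filter could itself introduce vulnerabilities in some cases, current guidance such as the OWASP Secure Headers Project recommends sending `X-XSS-Protection: 0` (or omitting the header) and relying on CSP instead. For comprehensive protection, combine these headers with other security measures like HTTPS and regular security audits.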
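If your site runs on a Python WSGI framework, one way to attach security headers to every response is a small middleware. This is a sketch, not a drop-in security solution. The header values are the CSP example from this section plus an HSTS header; `trusted-cdn.com` is a placeholder host. The middleware leaves alone any header the application already sets.

```python
SECURITY_HEADERS = [
    ("Content-Security-Policy", "default-src 'self'; script-src 'self' https://trusted-cdn.com"),
    ("Strict-Transport-Security", "max-age=63072000; includeSubDomains"),
]


def with_security_headers(app):
    """Wrap a WSGI application so every response carries SECURITY_HEADERS."""

    def wrapped(environ, start_response):
        def start_with_headers(status, headers, exc_info=None):
            already_set = {name.lower() for name, _ in headers}
            # Keep any value the application set deliberately for this response.
            extra = [(n, v) for n, v in SECURITY_HEADERS if n.lower() not in already_set]
            return start_response(status, list(headers) + extra, exc_info)

        return app(environ, start_with_headers)

    return wrapped


def hello_app(environ, start_response):
    """Tiny example WSGI application."""
    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8")])
    return [b"hello"]


application = with_security_headers(hello_app)
```

To try it locally, `wsgiref.simple_server.make_server("127.0.0.1", 8000, application).serve_forever()` serves the wrapped app. Browsers only honor the HSTS header on responses delivered over HTTPS.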

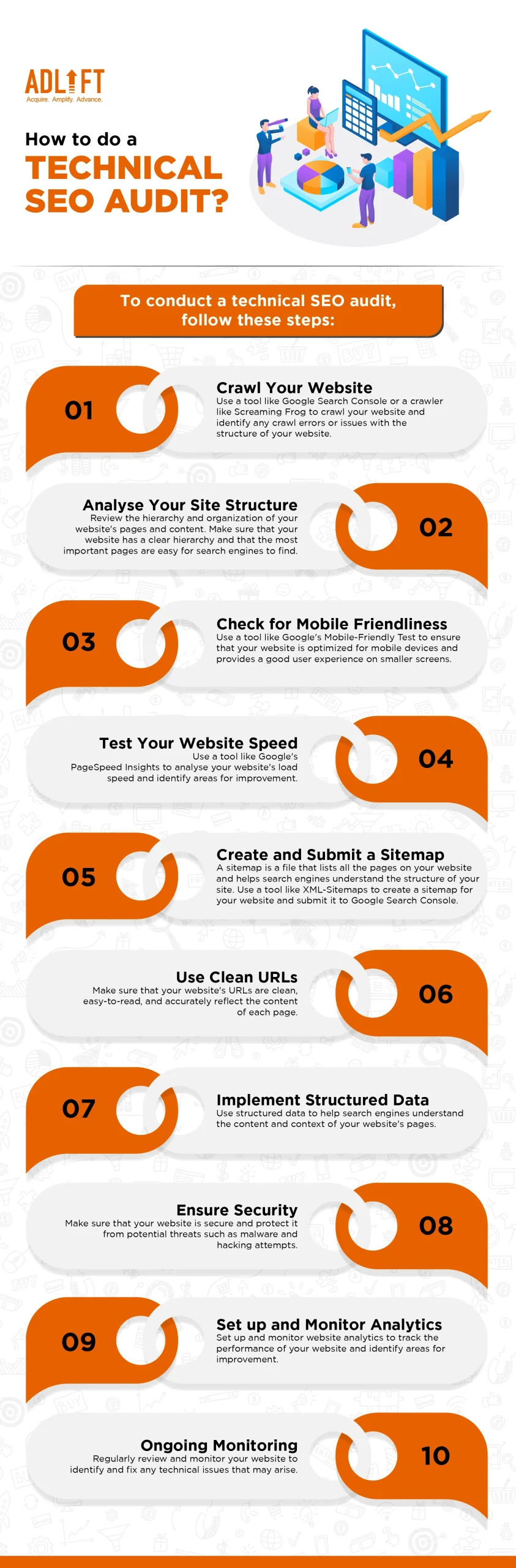

Technical Audit Essentials

Technical SEO audits are crucial for identifying and resolving issues that can impact a website's search engine visibility and performance. The process typically involves several key steps:

- Crawl the website using tools like Screaming Frog or SEMrush to identify structural issues, broken links, and duplicate content

- Analyze site speed and performance using Google PageSpeed Insights or GTmetrix

- Check mobile optimization and responsiveness across devices

- Review XML sitemaps and robots.txt files for proper configuration

- Examine URL structures, internal linking, and site architecture

- Verify HTTPS implementation and security headers

- Assess on-page elements like title tags, meta descriptions, and header tags

Prioritize issues based on their impact on search visibility and user experience. For example, addressing duplicate content or fixing crawl errors may take precedence over minor on-page optimizations 5. Regular audits, especially after major site changes or traffic drops, help maintain optimal technical SEO health and improve search engine rankings.

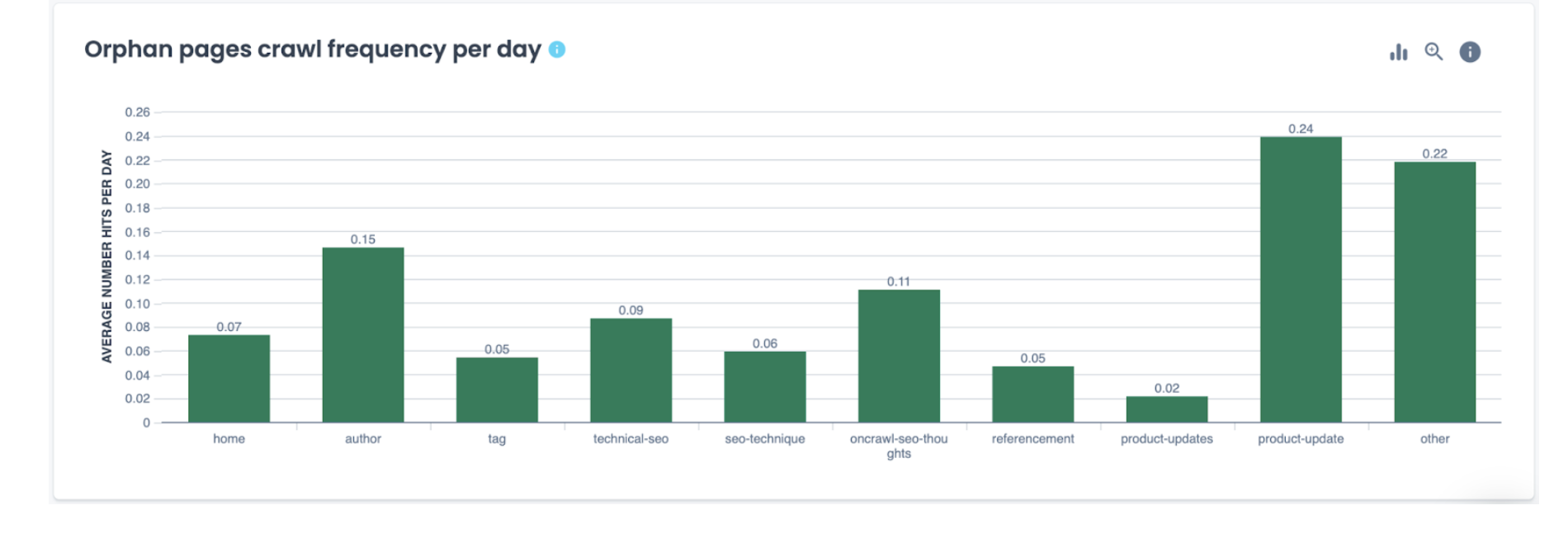

Crawl and Orphan Detection

Screaming Frog SEO Spider offers powerful configuration options to simulate crawls and identify orphaned pages effectively. To set up a custom configuration profile:

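- Under "Configuration > Spider," use the "Crawl" tab to choose which resources and links are followed and the "Limits" tab to set crawl depth

- Set crawl speed and the user agent under "Configuration > Speed" and "Configuration > User-Agent"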

- Save your custom configuration as a profile for future use

To identify orphaned pages:

- Enable "Crawl Linked XML Sitemaps" in the XML Sitemaps configuration

- Connect Google Analytics and Search Console in the API Access settings

- Start the crawl and let it complete

- Use the "Crawl Analysis" feature to process orphan URLs

- Filter results in the "Sitemaps," "Analytics," or "Search Console" tabs to view orphaned pages

This process helps uncover pages that exist but lack internal links, potentially impacting their discoverability and SEO performance. Regularly auditing for orphaned pages ensures optimal site structure and improves overall search engine visibility.
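The idea behind the orphan report can be illustrated in a few lines: collect every URL reachable by following internal links from the homepage, then compare that set with URLs known from other sources. The Python sketch below assumes you already have the internal link graph (for example, exported from a crawl) and a set of sitemap URLs; the function name and paths are hypothetical.

```python
from collections import deque


def find_orphans(links: dict[str, list[str]], start: str, known_urls: set[str]) -> set[str]:
    """Return URLs from known_urls that no chain of internal links reaches from start.

    links maps each crawled URL to the internal URLs it links to; known_urls
    comes from other sources such as the XML sitemap or analytics.
    """
    reachable = {start}
    queue = deque([start])
    while queue:  # breadth-first walk of the internal link graph
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in reachable:
                reachable.add(target)
                queue.append(target)
    return known_urls - reachable


# Hypothetical site: /blog/post-2/ links out to the homepage, but nothing links to it
links = {
    "/": ["/blog/", "/about/"],
    "/blog/": ["/blog/post-1/"],
    "/blog/post-2/": ["/"],
}
sitemap_urls = {"/", "/blog/", "/about/", "/blog/post-1/", "/blog/post-2/"}
print(find_orphans(links, "/", sitemap_urls))  # {'/blog/post-2/'}
```

Note that outgoing links from an orphan do not help it; only incoming internal links make a page reachable.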

Search Console Indexing Overview

The Google Search Console Indexing tab provides valuable insights into how Google crawls and indexes your website. This feature allows webmasters to monitor their site's indexing status and identify potential issues affecting search visibility. The Indexing report is divided into several key sections:

- Pages: Shows which of your site's pages are indexed and which are not, along with the reason each page isn't indexed

- Sitemaps: Lists submitted sitemaps, whether Google could process them, and how many URLs were discovered in each

- Removals: Allows temporary removal of URLs from search results

To open the Page indexing report, log into Google Search Console, select your property, and click on "Pages" under the "Indexing" section in the left-hand menu 3. The report shows a graph of indexed and not-indexed pages over time and lists the reasons pages were not indexed (the older Index Coverage report used the categories "Valid," "Error," and "Excluded" instead). This information helps identify indexing issues and prioritize fixes to improve your site's search performance.

For detailed analysis of specific URLs, use the URL Inspection tool within Search Console. This tool provides information about Google's indexed version of a page and allows you to test whether a URL is indexable 4. By regularly monitoring these reports, you can ensure your website maintains optimal visibility in Google search results.
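The same per-URL data is also available programmatically through the Search Console URL Inspection API, which is handy when you need to check more than a few pages. The Python sketch below only builds the request with the standard library; it assumes you already have an OAuth 2.0 access token with a Search Console scope, and the page URL, property URL, and token are placeholders. Check the endpoint and response field names against Google's current API reference before relying on them.

```python
import json
import urllib.request

# Endpoint of the Search Console URL Inspection API (v1)
INSPECT_ENDPOINT = "https://searchconsole.googleapis.com/v1/urlInspection/index:inspect"


def build_inspection_request(page_url: str, property_url: str, access_token: str) -> urllib.request.Request:
    """Build, but do not send, a POST request asking Google about one URL."""
    body = json.dumps({"inspectionUrl": page_url, "siteUrl": property_url}).encode("utf-8")
    return urllib.request.Request(
        INSPECT_ENDPOINT,
        data=body,
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def coverage_state(response: dict) -> str:
    """Extract the coverage state (e.g. "Submitted and indexed") from a parsed response."""
    return response["inspectionResult"]["indexStatusResult"]["coverageState"]


# Placeholder values; a domain property would use "sc-domain:example.com" as siteUrl
req = build_inspection_request(
    "https://example.com/blog/post-1/", "https://example.com/", "YOUR_ACCESS_TOKEN"
)
# To send it: with urllib.request.urlopen(req) as resp: data = json.load(resp)
```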

Advanced SEO Techniques

Advanced technical SEO strategies focus on optimizing complex aspects of website performance and search engine interaction. One key strategy is implementing structured data markup using JSON-LD, the format Google recommends 1. Structured data helps search engines understand your content and can make pages eligible for rich results, potentially improving click-through rates.
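As an illustration, the Python sketch below generates a JSON-LD block for an article using schema.org's `Article` type with a few commonly used properties (`headline`, `author`, `datePublished`). The helper name and sample values are hypothetical; check Google's structured data documentation for the properties required by the rich result you are targeting, and validate the output with the Rich Results Test.

```python
import json


def article_json_ld(headline: str, author: str, date_published: str) -> str:
    """Return a <script> element with schema.org Article markup in JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601, e.g. "2024-05-01"
    }
    # Escape "</" so text such as "</script>" cannot close the tag early
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


print(article_json_ld("Technical SEO for Beginners", "Jane Doe", "2024-05-01"))
```

Serializing with `json.dumps` rather than hand-writing the markup avoids quoting mistakes that would make the block invalid JSON.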

Another advanced technique is optimizing for Core Web Vitals, Google's key user-experience metrics: Largest Contentful Paint (LCP), Interaction to Next Paint (INP), which replaced First Input Delay (FID) in March 2024, and Cumulative Layout Shift (CLS) 2. Strategies to improve these metrics include:

- Implementing lazy loading for images and videos

- Minimizing JavaScript execution time

- Optimizing server response times

- Ensuring proper resource prioritization
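To see where a page stands, field measurements can be compared with Google's published Core Web Vitals thresholds, which Google applies to the 75th percentile of page loads: LCP is good at 2.5 s or less and poor above 4 s, INP is good at 200 ms or less and poor above 500 ms, and CLS is good at 0.1 or less and poor above 0.25. A minimal Python sketch of that classification, assuming you already have 75th-percentile values from a source such as the Chrome UX Report (the sample values are hypothetical):

```python
# Core Web Vitals thresholds: (upper bound for "good", upper bound for "needs improvement").
# LCP and INP are in milliseconds; CLS is unitless.
THRESHOLDS = {
    "LCP": (2500, 4000),
    "INP": (200, 500),
    "CLS": (0.1, 0.25),
}


def rate(metric: str, p75_value: float) -> str:
    """Classify a 75th-percentile value as good, needs improvement, or poor."""
    good_max, needs_improvement_max = THRESHOLDS[metric]
    if p75_value <= good_max:
        return "good"
    if p75_value <= needs_improvement_max:
        return "needs improvement"
    return "poor"


# Hypothetical field data for one page
for metric, value in {"LCP": 3100, "INP": 180, "CLS": 0.32}.items():
    print(metric, value, rate(metric, value))
```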

Additionally, server-side rendering (SSR) for dynamic content can improve initial page load times and SEO performance, especially for large, JavaScript-heavy websites 3. Because the content is already present in the HTML the server returns, search engines can crawl and index it without first executing JavaScript, potentially leading to better rankings for complex, content-rich sites.

International SEO Setup

Implementing hreflang tags is crucial for international SEO success. These tags help search engines serve the correct language version of your content to users based on their location and language preferences 1. To implement hreflang tags correctly:

- Use the appropriate language and region codes (e.g., "en-us" for English in the United States)

- Include self-referencing hreflang tags on each page

- Ensure reciprocal linking between all language versions

- Place tags in the HTML head, in HTTP headers, or in the XML sitemap
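Because every language version must list itself and all of its alternates, one simple way to keep annotations reciprocal is to generate a single identical block of tags and place it on every page in the set. The Python sketch below does that; the helper name and URLs are placeholders, and the optional `x-default` entry (a value Google supports for a fallback page) goes beyond the list above.

```python
from html import escape
from typing import Optional


def hreflang_tags(alternates: dict[str, str], x_default: Optional[str] = None) -> str:
    """Return the <link> elements to place in the <head> of every language version."""
    lines = [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}" />'
        for code, url in alternates.items()
    ]
    if x_default is not None:
        lines.append(f'<link rel="alternate" hreflang="x-default" href="{escape(x_default)}" />')
    return "\n".join(lines)


# Placeholder URLs for three language/region versions of the same page
versions = {
    "en-us": "https://example.com/us/",
    "en-gb": "https://example.com/uk/",
    "de-de": "https://example.com/de/",
}
print(hreflang_tags(versions, x_default="https://example.com/"))
```

Since the output is the same for every version, each page automatically carries its self-referencing tag and links to all the others.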

While Google Search Console previously offered a Geographic Targeting feature, this option is no longer available 2. Instead, use clear URL structures (such as country-specific domains or subdirectories) and proper hreflang implementation to signal your intended geographic targets 3. Google automatically associates country-code top-level domains (ccTLDs) such as .gg with their country, and this targeting cannot be overridden (apart from a few ccTLDs that Google treats as generic, such as .io and .co).

Googlebot Crawl Analysis

Log file analysis is a crucial technique for understanding how search engine bots, particularly Googlebot, interact with your website. By examining server logs, you can gain valuable insights into crawl patterns and optimize your site's visibility. Here's a breakdown of key aspects of log file analysis for SEO:

| Aspect | Description |
|---|---|
| Purpose | Examine server logs to understand Googlebot's behavior and site crawlability |
| Key Information | Time, date, IP address, response codes, user agent, requested files |
| Tools | Splunk, Loggly, Botify for processing and analyzing log data |
| Use Cases | Understand crawl behavior, verify business priorities, discover crawl budget waste |
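The core of log file analysis is parsing each request line and filtering for Googlebot. The Python sketch below assumes logs in the common Apache/Nginx "combined" format (adjust the regular expression for other formats) and uses hypothetical sample lines. Because any client can claim to be Googlebot in its user agent, Google recommends confirming genuine Googlebot traffic with a reverse DNS lookup followed by a forward lookup; this sketch skips that step.

```python
import re
from collections import Counter

# "combined" format: IP, identity, user, [time], "request", status, bytes, "referer", "user agent"
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines):
    """Count requests whose user agent claims to be Googlebot, keyed by (path, status)."""
    counts = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match and "Googlebot" in match["agent"]:
            counts[(match["path"], match["status"])] += 1
    return counts


GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample_log = [
    f'66.249.66.1 - - [10/Oct/2024:13:55:36 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Oct/2024:14:02:11 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Oct/2024:14:05:42 +0000] "GET /old-page/ HTTP/1.1" 404 312 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.5 - - [10/Oct/2024:14:06:00 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" "Mozilla/5.0 Chrome/120.0"',
]
print(googlebot_hits(sample_log))
```

Grouping by status code makes crawl waste visible quickly: repeated Googlebot hits on 404 or redirecting URLs are usually the first thing to fix.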

To help Googlebot find and crawl your most important pages, link to them prominently from your site and include them in your XML sitemap. Google ignores the sitemap's priority field, so a sitemap won't raise a page's priority directly, but it does point search engines to important content.

For large sites, consider using robots.txt to limit crawling of lower-priority areas at first, opening them up gradually as your site becomes established 5. Keep in mind that robots.txt controls crawling, not indexing: a blocked URL can still appear in search results if other pages link to it. Log file analysis then shows how Googlebot actually behaves, helping you optimize crawl efficiency and improve your site's overall SEO performance.
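Before deploying crawl restrictions like these, it helps to confirm which URLs they actually block. Python's standard-library `urllib.robotparser` can evaluate rules offline, as in the sketch below; the rules and URLs are hypothetical. Python's parser applies rules in file order and does not support Google's `*` and `$` wildcards, whereas Google uses the most specific matching rule, so treat this as a quick check rather than an exact replica of Googlebot's behavior.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules that hold back low-priority search and archive pages
ROBOTS_TXT = """\
User-agent: *
Disallow: /search/
Disallow: /archive/
"""

robots = RobotFileParser()
robots.parse(ROBOTS_TXT.splitlines())  # parse rules from text instead of fetching a URL

for url in [
    "https://example.com/blog/post-1/",
    "https://example.com/search/?q=seo",
    "https://example.com/archive/2019/",
]:
    allowed = robots.can_fetch("Googlebot", url)
    print(f"{url}: {'allowed' if allowed else 'blocked'}")
```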

Continuous SEO Upkeep

Ongoing SEO maintenance is crucial for sustaining and improving your website's search engine performance. Regular tasks include:

- Weekly technical health checks to ensure mobile responsiveness, site speed, and HTTPS security

- Monthly content audits to refresh underperforming pages and expand successful ones

- Quarterly backlink analysis to identify and disavow toxic links

- Annual comprehensive content audits to evaluate overall performance and set new goals

Implement minor adjustments regularly, such as optimizing meta descriptions and internal linking. Use tools like Google Search Console for ongoing performance tracking and error detection 3. Stay flexible and adapt your strategy based on algorithm updates and industry trends. By consistently maintaining your SEO efforts, you can ensure long-term growth and maximize your digital marketing ROI.

SEO Performance Monitoring Tools

Automated alert systems are crucial for proactive IT management, allowing teams to quickly respond to issues before they impact users. Tools like PagerDuty and Checkmk offer real-time monitoring and alerting capabilities, sending notifications via email, SMS, or integrations with ticketing systems when predefined thresholds are breached 1. These systems can monitor network uptime, bandwidth utilization, and device health, enabling IT teams to address problems swiftly.
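For SEO, the same threshold idea can be applied to metrics such as daily organic clicks. The Python sketch below flags a day whose clicks fall well below the recent average; the function name, the seven-day window, and the 30% threshold are hypothetical defaults, and delivering the alert (email, SMS, or a tool like PagerDuty) is left out.

```python
from statistics import mean


def traffic_drop_alert(daily_clicks: list[int], window: int = 7, threshold: float = 0.30) -> bool:
    """Return True if the most recent day's clicks are more than `threshold`
    (as a fraction) below the mean of the `window` days before it."""
    if len(daily_clicks) < window + 1:
        return False  # not enough history to form a baseline
    baseline = mean(daily_clicks[-window - 1:-1])
    if baseline == 0:
        return False  # avoid dividing by zero on a page or site with no traffic
    drop = (baseline - daily_clicks[-1]) / baseline
    return drop > threshold


clicks = [410, 395, 420, 405, 398, 412, 401, 250]  # hypothetical daily organic clicks
if traffic_drop_alert(clicks):
    print("Organic clicks dropped sharply; check Search Console for errors")
```

Comparing against a rolling average rather than the previous day alone reduces false alarms from normal day-to-day variation.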

Google Analytics custom reports provide valuable insights into website performance and user behavior.

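In Universal Analytics, you created a custom report under Customization > Custom Reports by selecting metrics and dimensions relevant to your SEO goals and a visualization type like Explorer or Flat Table 2. Standard Universal Analytics properties stopped processing data in July 2023; in Google Analytics 4, the closest equivalent is the Explore section, where explorations such as a free-form table combine the dimensions and metrics you choose. Custom reports can track specific KPIs, compare performance across different segments, and help identify areas for improvement in your SEO strategy 3.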

By combining automated alerts with customized analytics reports, marketers can maintain a comprehensive view of their technical SEO performance and respond quickly to emerging issues or opportunities.

Deployment Safety Measures

Implementing robust update deployment protocols is crucial for maintaining website stability and performance. A key component of this process is utilizing a staging environment, which serves as a replica of the production environment for testing new code changes before deployment 1. This allows developers to identify and fix potential issues without affecting the live site.

Essential staging environment checks include:

- Unit testing to verify individual components

- Integration testing to ensure different parts of the system work together

- Performance testing to simulate high traffic loads

- User acceptance testing to confirm updates meet requirements 2

Alongside thorough testing, it's vital to have a well-defined rollback plan in place. This contingency strategy allows for swift reversion to a previous stable version if unforeseen issues arise post-deployment 3.

Key elements of an effective rollback plan include clearly defined triggers for initiating a rollback, detailed step-by-step instructions for executing the process, and strategies to minimize impact on users and data integrity 4. Regular reviews and updates of these protocols ensure they remain aligned with evolving business objectives and technological changes 4.
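Defining rollback triggers as explicit numbers makes the decision fast and unambiguous when a release misbehaves. The Python sketch below compares a post-deployment snapshot with a pre-deployment baseline; the three metrics, the class and function names, and every threshold are hypothetical examples rather than recommended values, and collecting the numbers (from monitoring, field data, or a crawl) is left out.

```python
from dataclasses import dataclass

# Hypothetical trigger thresholds; tune these to your own site's baseline
MAX_ERROR_RATE = 0.02        # roll back if more than 2% of requests return 5xx
MAX_LCP_REGRESSION = 0.20    # roll back if p75 LCP worsens by more than 20%
MAX_INDEXABLE_DROP = 0.05    # roll back if indexable pages fall by more than 5%


@dataclass
class HealthSnapshot:
    error_rate: float      # share of requests returning 5xx (0.0 to 1.0)
    p75_lcp_ms: float      # 75th-percentile Largest Contentful Paint, in ms
    indexable_pages: int   # pages returning 200 without a noindex directive


def rollback_reasons(before: HealthSnapshot, after: HealthSnapshot) -> list[str]:
    """Return the triggers that fired; an empty list means keep the release."""
    reasons = []
    if after.error_rate > MAX_ERROR_RATE:
        reasons.append("server error rate above limit")
    if after.p75_lcp_ms > before.p75_lcp_ms * (1 + MAX_LCP_REGRESSION):
        reasons.append("LCP regression above limit")
    if after.indexable_pages < before.indexable_pages * (1 - MAX_INDEXABLE_DROP):
        reasons.append("drop in indexable pages above limit")
    return reasons


baseline = HealthSnapshot(error_rate=0.004, p75_lcp_ms=2000, indexable_pages=1000)
post_deploy = HealthSnapshot(error_rate=0.031, p75_lcp_ms=2100, indexable_pages=720)
print(rollback_reasons(baseline, post_deploy))
```

Returning the list of fired triggers, rather than a bare yes/no, also documents why the rollback happened.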

Algorithm Update Readiness

To prepare for Google algorithm updates, follow Google Search Essentials (formerly the Webmaster Guidelines) and analyze historical traffic patterns. Google's guidelines emphasize creating high-quality, user-focused content, ensuring proper site structure, and avoiding deceptive practices 1. Key points include:

- Providing valuable, original content that answers user queries

- Implementing clear site navigation and mobile-friendly design

- Using descriptive, accurate page titles and meta descriptions

- Avoiding hidden text, cloaking, or other manipulative techniques

Analyzing historical traffic patterns helps identify trends and potential vulnerabilities to algorithm changes 2. Use tools like Google Analytics and Search Console to:

- Track organic traffic fluctuations over time

- Monitor keyword rankings and click-through rates

- Identify pages with sudden traffic drops or gains

- Assess the impact of previous algorithm updates on your site
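Assessing a past update's effect usually comes down to comparing traffic before and after its rollout date. Here is a minimal Python sketch, assuming you have exported daily organic clicks (for example from the Search Console Performance report) into a date-keyed dictionary; the function names, update date, and click numbers are hypothetical. A simple before/after comparison cannot separate an update's effect from seasonality or your own site changes, so treat the result as a starting point for investigation.

```python
from datetime import date, timedelta
from statistics import mean


def _days_around(update_day: date, window: int, direction: int) -> list[date]:
    """The `window` days before (direction=-1) or after (direction=1) update_day."""
    return [update_day + timedelta(days=direction * i) for i in range(1, window + 1)]


def update_impact(clicks_by_day: dict[date, int], update_day: date, window: int = 14) -> float:
    """Percentage change in mean daily clicks after an update versus before it.

    The update day itself and any days missing from the data are skipped.
    """
    before = [clicks_by_day[d] for d in _days_around(update_day, window, -1) if d in clicks_by_day]
    after = [clicks_by_day[d] for d in _days_around(update_day, window, 1) if d in clicks_by_day]
    if not before or not after or mean(before) == 0:
        raise ValueError("not enough click data around the update date")
    return (mean(after) - mean(before)) / mean(before) * 100


update = date(2024, 3, 5)  # hypothetical update date
clicks = {update - timedelta(days=i): 200 for i in range(1, 15)}
clicks.update({update + timedelta(days=i): 150 for i in range(1, 15)})
print(f"{update_impact(clicks, update):+.1f}%")  # -25.0%
```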

By combining adherence to Google's guidelines with data-driven insights from historical analysis, you can better prepare for and adapt to future algorithm updates, maintaining or improving your site's search visibility 3.

Conclusion

Technical SEO is the foundation of a successful online presence, crucial for improving search engine visibility and user experience. By optimizing technical aspects such as site structure, speed, and mobile-friendliness, websites can significantly enhance their crawlability, indexability, and overall performance in search results 1 2.

Key takeaways for effective technical SEO include:

- Regularly auditing and updating technical elements to stay aligned with search engine algorithms

- Focusing on Core Web Vitals to improve user experience and search rankings

- Implementing structured data to enhance search result appearance and click-through rates

- Ensuring proper international SEO setup for global reach

- Maintaining ongoing vigilance through monitoring tools and update protocols

By prioritizing technical SEO, businesses can create a solid foundation for their digital marketing efforts, leading to improved organic traffic, higher conversions, and a competitive edge in the online marketplace 4. As search engines continue to evolve, staying proactive in technical optimization will be essential for long-term success in the digital landscape.